

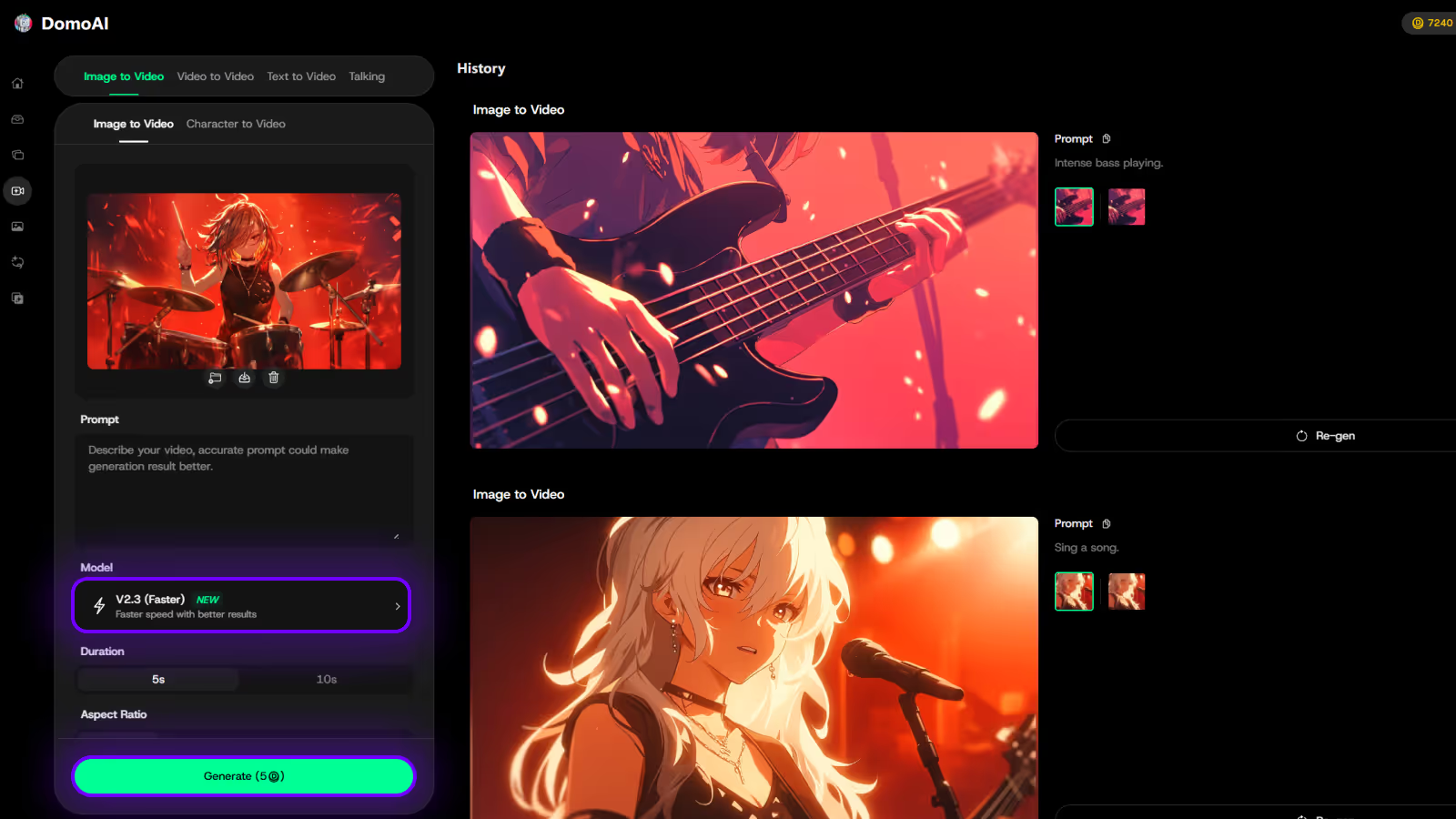

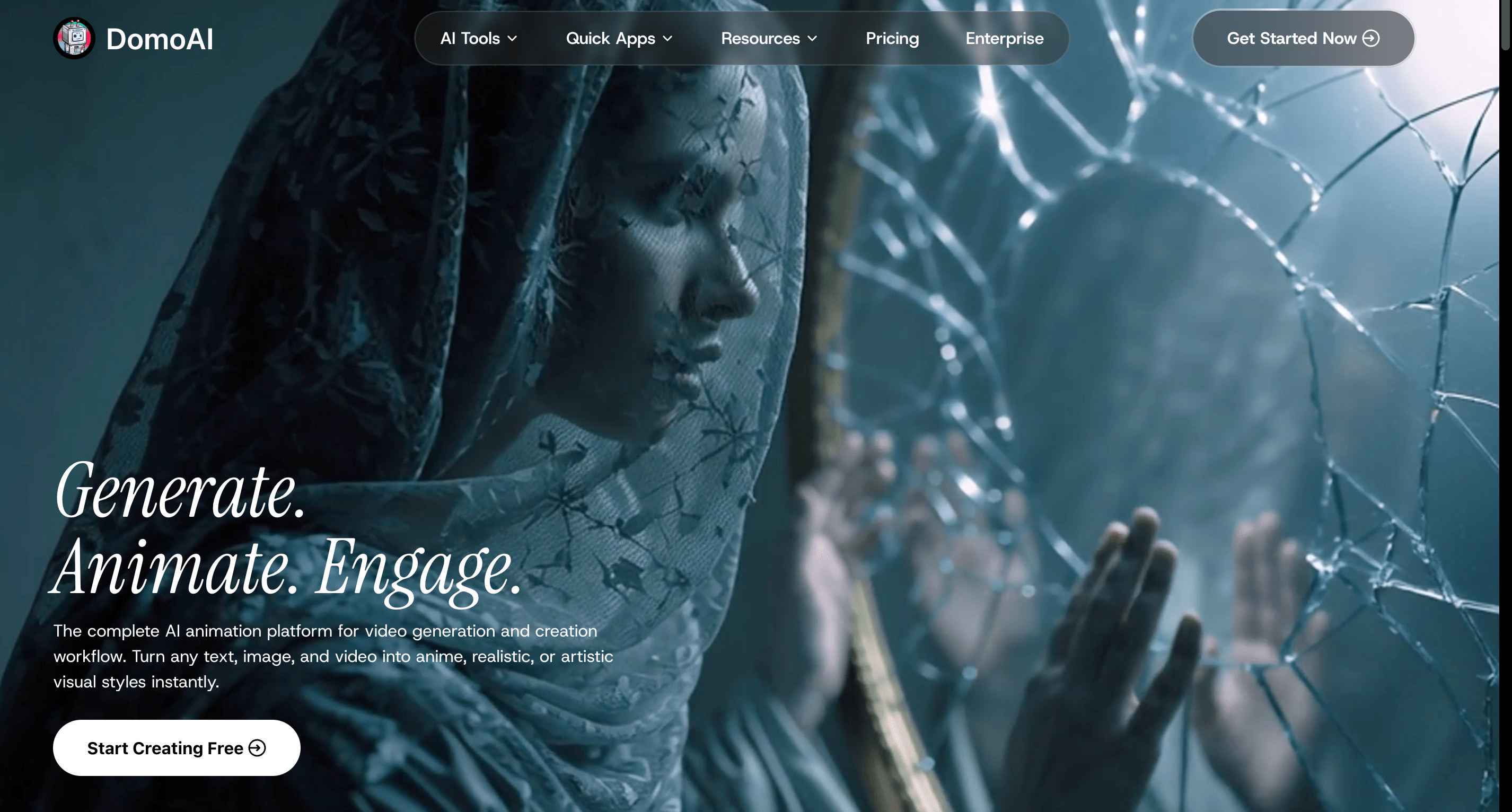


You are juggling a script, a stack of raw clips, and a hard deadline inside Veo 3. Sound familiar? Pollo AI has become a common choice for AI-driven video creation, using machine learning for text-to-video, voice cloning, and automatic editing to speed up work for content creators. Which tools deliver better quality, more control, or lower cost for your projects? This article maps the best Pollo AI alternatives for AI video creation so you can compare options and choose what fits your workflow and goals.

To help with that, DomoAI offers an AI animation generator that turns scripts into polished videos with ready-made templates, automated cuts, and simple export options so you can test alternatives and move faster.

For creators comparing Pollo AI alternatives, DomoAI’s AI Lip Sync Video Generator adds another layer of polish by matching animated characters or footage with natural, synchronized speech.

Pollo AI’s free plan gives you 20 credits. That usually buys two short videos or a couple of quick tests. Casual creators and teams exploring an AI video generator want to iterate more than that.

When the free trial ends, the next step is a paid plan or a credit top-up, which blocks many who just want to play with text-to-video features, avatar generation, or style transfer. Want to keep testing without emptying your wallet?

Users praise Pollo.ai for its generative AI and creative effects, yet output quality can swing between striking and generic. Lower model tiers or tight prompt constraints often yield inconsistent visuals.

Prompt engineering helps, but it adds friction for people who expect reliable results on every render. If you plan a client project, how do you manage when model tiers produce different finishes?

Pollo AI enforces content moderation and NSFW filtering. Some creators and Reddit threads report that the filters block mature themes or nudity, which they view as creative censorship. Trustpilot reviews echo frustration when a filter prevents a requested concept.

Platforms have to balance safety and freedom, but creators who need looser rules look elsewhere for fewer limits on subject matter and adult content.

Credits determine video length, model choice, and special features. Without a preview option, users often burn credits on outputs that don't meet expectations. That makes budgeting unpredictable for short videos and longer pieces alike.

Subscription tiers and pay-as-you-go structures can be confusing, and customers report wasted credits and surprise costs on Trustpilot. What happens when a client asks for a 60-second piece and you cannot estimate the credit spend?
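One way to make that budgeting question less of a guess is to estimate the spend before you render. The sketch below is illustrative only: the function name, the clip length, the per-clip credit cost, and the retry rate are all assumptions, not Pollo AI's published pricing. The 10-credit figure simply mirrors the rough "20 credits buys two short videos" observation above.

```python
import math

def estimate_credits(target_seconds, clip_seconds, credits_per_clip, retry_rate=0.0):
    """Rough credit estimate for a video assembled from fixed-length clips.

    All inputs are assumptions you supply; none come from a vendor price list.
    retry_rate is the fraction of renders you expect to discard (0 <= rate < 1).
    """
    if target_seconds <= 0 or clip_seconds <= 0 or credits_per_clip < 0:
        raise ValueError("durations must be positive and credits non-negative")
    if not 0 <= retry_rate < 1:
        raise ValueError("retry_rate must be in [0, 1)")

    # Number of usable clips needed to cover the target duration.
    clips_needed = math.ceil(target_seconds / clip_seconds)
    # If a fraction of renders is thrown away, each usable clip costs
    # 1 / (1 - retry_rate) attempts on average.
    expected_attempts = clips_needed / (1 - retry_rate)
    return math.ceil(expected_attempts * credits_per_clip)

# Hypothetical numbers: 60-second piece, 5-second clips, 10 credits per clip.
best_case = estimate_credits(60, 5, 10)
with_retries = estimate_credits(60, 5, 10, retry_rate=0.5)
print(best_case, with_retries)
```

Quoting a client the estimate with a realistic retry rate, rather than the best case, leaves room for the renders that miss the brief.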

The search for price flexibility, consistent video generation quality, clearer credit systems, and fewer creative restrictions is what drives users to look elsewhere. Teams want predictable renders across model tiers, a preview or lower-cost proofing pass, transparent pricing, and options for longer videos or custom style control.

Many compare review threads on Reddit and Trustpilot before switching. Which of these trade-offs matters most to your workflow?

Choose a platform that does more than convert images into short clips. Look for text-to-image, text-to-video, and direct video editing in the same app so you can prototype an idea with text, refine frames by hand, and export a timeline for final touch-ups. Check for templates, style transfer, and live previews so you can change direction fast without starting over.

A good alternative will let you upload images, footage, and clean audio, and then work on them deeply. Expect masking, layering, chroma key, motion tracking, and frame-by-frame keyframes, rather than just trims and filters.

Find editors that support alpha channels, multi-track timelines, and nondestructive edits so your source files stay editable as you iterate.

Inspect pricing pages, subscription defaults, and trial limits before you commit a card. Confirm whether plans default to annual billing, what overage charges look like, and how the vendor handles refunds and disputes.

Ask for example invoices and cancellation steps so you can verify charges match what the dashboard shows.

Need quick help with a broken render or a billing dispute? Evaluate response channels: email, live chat, phone, and an active forum with moderated threads. Look for documented service level targets, clear ticket IDs, and a knowledge base with troubleshooting guides and video walkthroughs so you can get unstuck fast.

Test how the AI handles motion, facial fidelity, and delicate texture at your target resolution and frame rate. Watch for smooth animation over time, consistent lip sync for talking avatars, and high detail when you upscale to 1080p or 4K.

Run a sample project to measure render times, GPU acceleration, batch processing, and whether results arrive without artifacts across frames.

Pick a tool that exports in multiple formats and plugs into editors and automation. Does it export ProRes, MOV, and MP4? Does it connect via API, webhooks, Zapier, or have direct export to Adobe Premiere or After Effects?

Check for team accounts, role permissions, single sign-on, and cloud storage links so you can slot the tool into your production pipeline.

Creating cool videos used to mean hours of editing and lots of technical know-how. DomoAI's AI video editor changes that: you can turn photos into moving clips, make videos look like anime, or create talking avatars just by typing what you want. Create your first video for free with DomoAI today!

DomoAI gives creators an escape from strict credit limits and uneven output that some video generators impose. It converts text, images, and video into anime, realistic, or artistic visuals in seconds while letting you switch styles on the fly. Features include multi-style generation via style transfer or reference media, automated background removal, lip syncing, motion control, and AI upscaling that reduce editing time.

Upload a dance clip or a style reference, and the system replicates motion and look in your footage. It also supports talking avatars and character animation with automatic speech-to-lip movement alignment.

Creators who want a broader style range than Pollo AI offers. Businesses and influencers producing social media content at scale. Animators who need quick, polished character pieces.

Community templates and remixable styles keep creativity open and prevent running out of usable options. Innovative editing tools replace many manual tasks and support faster turnaround for short-form and long-form content. Over 3 million creators are already generating viral content with DomoAI.

Kling AI runs on new models like Kling and Kolors to power text-to-video and image-to-video creation. The interface encourages exploration: you can scan many public projects, remix them with one click, or team up with experienced AI artists to refine ideas. It supports both fully original generation and crowd-inspired remix workflows.

The platform makes experimentation easy.

It is strong for creators who want to mix styles or evolve work through shared inputs.

Sora is OpenAI’s text-to-video model for clips up to one minute. It uses diffusion transformer methods to generate realistic and imaginative scenes and keeps character and visual continuity across multiple shots within the same output.

The model offers deep linguistic and physical awareness, so motion, camera framing, and object interactions remain coherent.

Film production previsualization, educational clips, marketing assets, and any creator who needs fast one-minute outputs with consistent characters and shot transitions. Sora aims to reduce the time from script to shareable video.

Runway focuses on live collaboration and next-generation AI effects. Its Gen 3 Alpha model generates hyper-real, fully editable videos from plain text inputs. Editors can apply AI-driven effects in real time, tweak frames, and keep full creative control over layers and masks.

Runway moves heavy lifting into AI while keeping manual control where you want it. Teams can iterate quickly, apply advanced effects, and export pro-ready assets without rebuilding scenes from scratch.

Vidu excels at 2D line art and fast motion scenes. The system interprets sparse prompts and produces clear, high-performance outputs for complex action. It handles rapid movement and intricate sequences better than many competitors, which makes it a solid choice when time-sensitive or motion-heavy content is the priority.

Action sequences, animated shorts that rely on line art aesthetics, and any scene where motion fidelity matters more than photoreal texture.

Luma AI keeps the interface minimal and approachable so non-technical creators can start quickly. The product includes step-by-step guides and tutorials to shorten ramp-up time.

It supports text, image, and video editing workflows and focuses on turning conceptual ideas into finished visuals without steep learning curves.

Solo creators, small teams, and educators who need straightforward tools to produce polished results without deep technical setup.

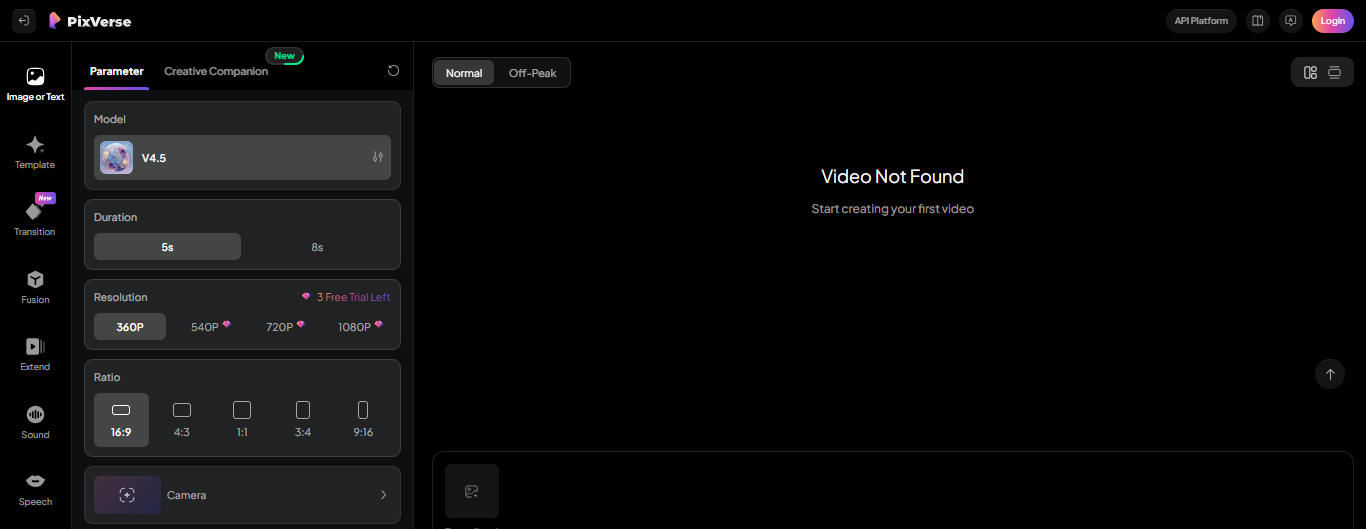

PixVerse turns ordinary photos and clips into AI-enhanced content with real-time effects. Upload a video, choose a style, and apply changes instantly. The app speeds up audio-video production and supports quick restyling for social posts. It aims to democratize professional looks by removing long render cycles.

Social media restyles, meme-ready transformations, and short promotional clips that require fast turnarounds and immediate sharing.

Veo 2 generates high-fidelity video across styles and genres with strong physics and human motion understanding. It gives filmmakers control over genre, lens choices, and visual effects, producing pro-quality shots up to 4K.

The model can interpret cinematic requests like tracking low-angle shots or intimate close-ups while maintaining consistent expression and movement.

Use Veo 2 when you need cinematic realism, precise camera staging, and high-resolution output for commercials or narrative scenes.

Hunyuan Video runs with 13 billion parameters and focuses on open source accessibility. It supports resolutions up to 720 by 1280 and aims to deliver professional-grade results for commercials and artwork.

The model presents strong performance for projects that need a capable open source engine rather than a closed commercial API.

Hunyuan suits teams that require self-hosting or deeper customization while keeping good visual fidelity for web and mobile delivery.

Pika Labs animates still images into video with playful effects like melting, exploding, inflating, and dissolving. It prioritizes speed with high-throughput rendering for instant content creation.

The site supports one-click social sharing, which makes it a fit for creators focused on entertainment and quick engagement.

Create eye-catching posts, short social clips, and experimental visuals that need fast render and easy distribution.

Consider your target resolution, style needs, and whether lip sync and avatar motion are core features for your projects.

Viewer attention drops sharply within the first seconds. Open with a clear signal of value so people know why to stay. Lead with the main point, then layer in supporting detail. Use quick cuts, dynamic text, and visual shifts to keep momentum. Keep most videos under two minutes unless you truly have long-form content worth the extra time.

Apply progressive disclosure: deliver the key message first, add useful context next, and save examples or finer points for later. That way, viewers still leave with the essentials even if they drop off early.

Test multiple AI-generated thumbnails to see which images or faces drive clicks; tools like Pollo AI can generate thumbnail variants and predict click-through performance.

Use contrast, pacing, and framing to guide attention. Large, readable text and tight shots work best on small screens. Vary shot sizes and add motion every few seconds; static frames cost you viewers.

Many viewers watch without sound, and global reach demands localisation. Automated captions support accessibility and boost watch time. AI-driven translation turns one master video into many regional assets without costly reshoots.

Add captions or subtitles, generate translated scripts, and apply synthetic voice cloning for a consistent brand narrator. AI-driven colour grading ensures visual consistency across devices and lighting conditions.

Use dynamic text replacement to tailor language and offers by audience segment. AI can also place calls-to-action at the points where viewers are least likely to drop off. Pollo AI offers multilingual support, personalisation, and voice cloning to help scale without losing brand voice.

Most viewing happens on phones. Use big type, strong contrast, and tight subject framing. Test legibility at thumb size. Make the first three seconds visually clear and relevant without sound.

Produce multiple aspect ratios: square for Facebook, vertical for TikTok and Stories, horizontal for YouTube and LinkedIn. Auto-reframe and reformatting tools (e.g., Pollo AI) can speed this while keeping branding intact.
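If you want to see what a reframe actually does to your footage, the arithmetic is simple. The sketch below computes the largest centered crop for each target ratio from a single master frame size. It is a plain geometry helper, not any tool's API: the function name is made up for illustration, and rounding down to even dimensions is an assumption that suits common encoder settings.

```python
def center_crop(width, height, ratio_w, ratio_h):
    """Return (x, y, crop_w, crop_h) for the largest centered crop
    with aspect ratio ratio_w:ratio_h inside a width x height frame."""
    if min(width, height, ratio_w, ratio_h) <= 0:
        raise ValueError("all arguments must be positive integers")

    # Integer cross-multiplication compares aspect ratios without float error.
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        crop_w = width
        crop_h = width * ratio_h // ratio_w

    # Round down to even sizes (assumption: encoder prefers even dimensions).
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2

    # Center the crop window in the frame.
    x = (width - crop_w) // 2
    y = (height - crop_h) // 2
    return x, y, crop_w, crop_h

# One 1920x1080 master, three deliverables.
for name, (rw, rh) in {"square": (1, 1), "vertical": (9, 16), "horizontal": (16, 9)}.items():
    print(name, center_crop(1920, 1080, rw, rh))
```

A vertical crop of a horizontal master keeps only about a third of the frame width, which is why tight subject framing in the original shot matters so much.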

Hook with on-screen text, expressive visuals, and captions so your video communicates instantly, even on mute.

Focus on watch time, drop-off points, rewatch segments, click-through, and conversions. Analytics dashboards can highlight what’s working and what isn’t.

A/B test openers, thumbnails, voice styles, and CTAs. Compare burned-in captions vs. sidecar files, or translated vs. original language. Use small, frequent updates instead of big overhauls.
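Before declaring a winner, it helps to check whether the difference between two variants is larger than random noise. The sketch below runs a standard two-proportion z-test on click counts using only the Python standard library. The function name and the sample numbers are illustrative assumptions, and the test itself is one common choice, not something any particular analytics dashboard is known to use.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare click-through rates of variants A and B.

    Returns (z, p_value) for a two-sided two-proportion z-test.
    A positive z means variant B has the higher click-through rate.
    """
    if views_a <= 0 or views_b <= 0:
        raise ValueError("each variant needs at least one view")

    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the null hypothesis that both variants perform alike.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        # No variation at all (every view clicked, or none did).
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the normal distribution: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical thumbnail test: 50 clicks vs 80 clicks on 1,000 views each.
z, p = two_proportion_z_test(50, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the gap is unlikely to be chance. With only a few hundred views per variant, keep the test running before acting on it.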

Drop-off curves show pacing issues; heat maps and rewatch graphs reveal what connects. Adjust scripts, pacing, or visuals accordingly, and republish quickly with AI editing or workflow tools.
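To find the moment that needs a re-edit, you can scan an exported retention curve for its steepest fall. This is a minimal sketch that assumes you have the curve as a list of audience fractions sampled once per second; the function name and the sample data are hypothetical.

```python
def steepest_drop(retention):
    """Find the largest single-step loss in a retention curve.

    retention: fractions of the audience still watching, sampled at
    equal intervals (assumed here to be one value per second).
    Returns (index, drop): the drop happens between index and index + 1.
    """
    if len(retention) < 2:
        raise ValueError("need at least two samples")

    # Loss between each pair of consecutive samples.
    drops = [retention[i] - retention[i + 1] for i in range(len(retention) - 1)]
    worst = max(range(len(drops)), key=drops.__getitem__)
    return worst, drops[worst]

# Hypothetical curve: a sharp loss between second 1 and second 2.
curve = [1.0, 0.9, 0.6, 0.55, 0.5]
second, loss = steepest_drop(curve)
print(f"Biggest drop starts at second {second}: {loss:.0%} of viewers lost")
```

Whatever is on screen at that timestamp is the first candidate for a tighter cut or a new visual beat.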

Digital video now reaches over 3.4 billion viewers worldwide. To operate at that scale, AI can turn a single story into many localised, platform-ready versions. Tools such as Pollo AI's video platform, SDK, and creator suite help speed up repeatable output while keeping brand style consistent.

DomoAI replaces long editing sessions with an AI-driven workflow that anyone can use. It applies generative AI models and computer vision to cut, color, and animate without manual keyframing or mastering complex software.

Like Pollo AI, it blends neural networks, machine learning, and cloud rendering so you spend time on ideas instead of tool mechanics. What would you make with those freed hours?

Upload a photo and DomoAI creates motion with depth cues, parallax, and frame interpolation. The system uses image animation and motion synthesis to generate smooth movement, auto-stabilizes and fills in missing pixels, and keeps motion consistent across shots.

You do not need motion capture rigs or laborious rotoscoping to get cinematic parallax or smooth camera moves.

Style transfer and neural rendering let you convert footage into anime style with controlled color palettes, line work, and shading. The models apply consistent frame-to-frame stylization so characters keep the same look while motion remains fluid.

You can tweak intensity, preserve facial detail for lip sync, or push a more illustrated look for brand content.

DomoAI creates talking avatars by combining avatar creation, facial reenactment, and text-to-speech. Type a script, pick a voice, or use voice cloning, and the avatar lip syncs with natural expressions.

The process uses multimodal AI to map phonemes to mouth shapes and to add microexpressions that match the tone you choose. Want an avatar for an explainer, a course, or a branded channel?

Content creators, social marketers, educators, and small studios get the most immediate value. If you need fast social clips, personalized messages, or stylized short films, the platform handles the repetitive tasks for you.

Teams also use APIs to integrate DomoAI into publishing workflows and to scale content production across channels.

The editor automates key steps: scene detection, audio alignment, motion smoothing, and export optimization. It uses cloud rendering to avoid local hardware limits and applies safety checks like watermarking options and deepfake detection features.

The models respect copyright rules and offer tools for consent management when creating synthetic avatars and voice clones.

Sign up, upload your assets, choose a template or style, type a prompt, and let the AI generate a draft you can refine. You can replace voices, adjust timing, change styles to anime or realism, and export optimized files for social platforms.

Ready to test a concept with minimal time and no steep learning curve?


© 2026 DOMOAI PTE. LTD.
