

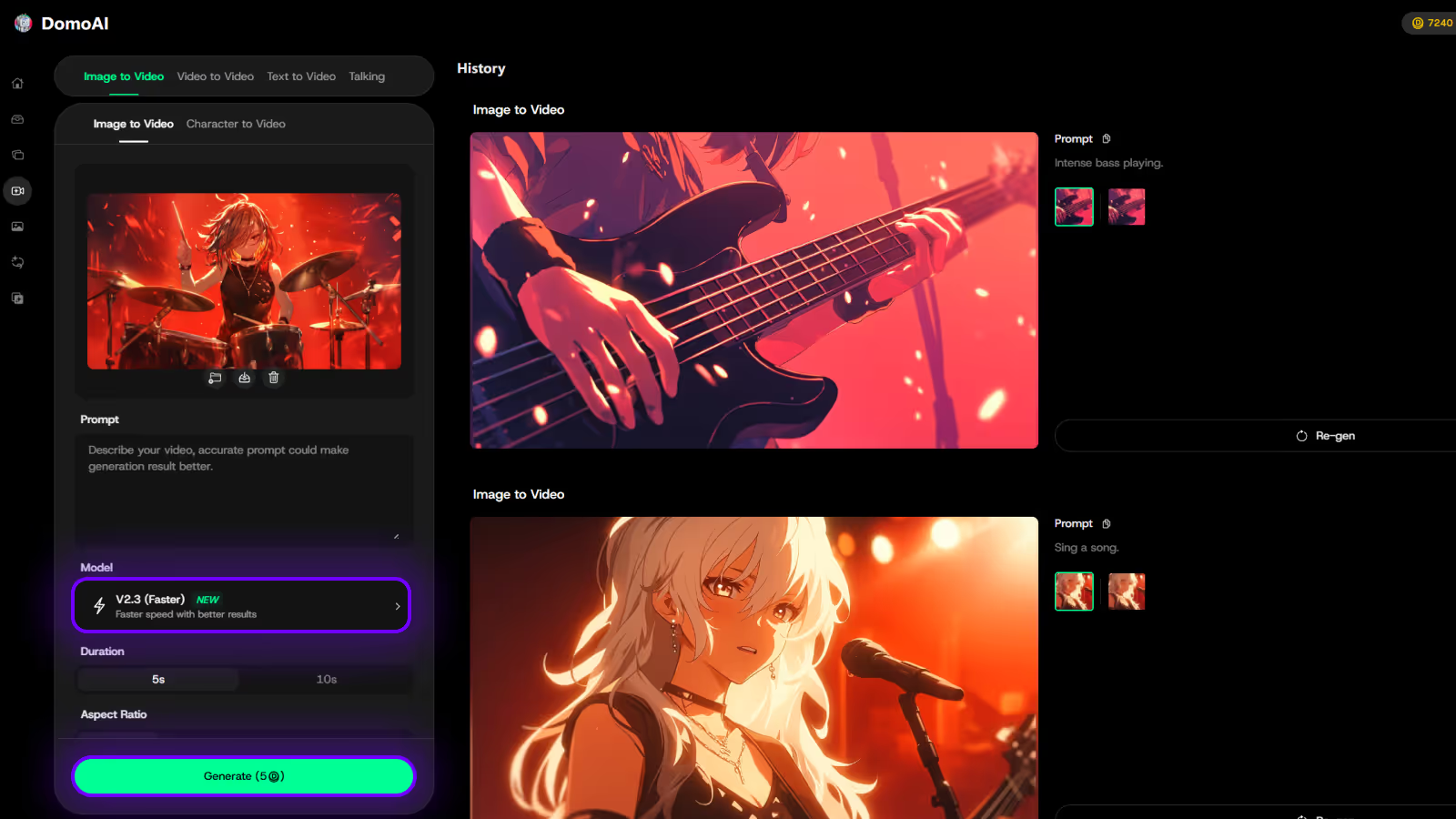

Try DomoAI, the Best AI Animation Generator

Turn any text, image, or video into anime, realistic, or artistic videos. Over 30 unique styles available.

Creators working in tools like Veo 3 often hit the same snag: platforms that promise easy AI video creation but lock them into one workflow. Have you struggled with slow renders, one-size-fits-all templates, or AI edits that strip out your intent? This article compares Krea AI alternatives for AI video generation and editing, covering generative AI platforms, text-to-video tools, templates, prompt-driven editors, and fast production workflows, so you can pick the creative studio that fits your process and goals.

DomoAI's AI video generator offers a simple, fast way to test ideas, make clever edits, and turn scenes into finished clips without a steep learning curve.

Among the most useful tools, DomoAI’s AI Anime Video Generator stands out for creators who want to move fast without losing creative flexibility.

Krea AI focuses on short-form output, which limits creators to brief clips rather than longer pieces. Storytellers, educators, and marketing teams often need longer run times for narratives, tutorials, or commercials.

That restriction forces people who need multi-minute sequences or chaptered videos to look for alternative AI video generator tools that allow full-length projects. What do you do when your script requires more than a quick clip?

In Krea AI, text-to-video jobs can take up to nine minutes to complete, and image-to-video jobs can run around three minutes. Those render times add up as you iterate, test prompts, and make minor adjustments.
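To see how quickly those waits compound, here is a back-of-the-envelope calculation. It is a sketch that assumes every iteration is a full re-render at the upper-bound times quoted above (about nine minutes for text-to-video, about three for image-to-video); the iteration counts are hypothetical.

```python
# Rough estimate of time spent waiting on renders while iterating on one project.
# Assumption: each iteration is a full re-render at the upper-bound times
# reported for Krea AI (about 9 min text-to-video, about 3 min image-to-video).
TEXT_TO_VIDEO_MIN = 9
IMAGE_TO_VIDEO_MIN = 3


def total_wait_minutes(text_renders: int, image_renders: int) -> int:
    """Return the total minutes spent waiting on renders."""
    return text_renders * TEXT_TO_VIDEO_MIN + image_renders * IMAGE_TO_VIDEO_MIN


# Hypothetical session: 10 prompt tweaks plus 10 image-to-video tests.
wait = total_wait_minutes(text_renders=10, image_renders=10)
print(f"{wait} minutes (~{wait / 60:.1f} hours) of rendering")
# prints "120 minutes (~2.0 hours) of rendering"
```

Even a modest session of twenty renders can cost two hours of waiting before any editing happens.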

Creators on tight deadlines or teams managing many projects need faster video rendering and lower latency from their AI video creation platform. Can your workflow tolerate lengthy waits between edits?

Users report clear logical errors in generated clips. When a character looks anatomically wrong or a simple object moves for no reason, the illusion breaks and credibility suffers. These visual artifacts stem from weaknesses in the generative AI model and harm professional projects. Would you present a clip with obvious anatomical errors to a client?

Generated footage sometimes shows jitter, rough detail, and inconsistent rendering across successive frames. Temporal coherence and frame consistency matter when you want smooth motion or stable character appearance.

Human figures often shift shape, lighting can jump, and small details become noisy from one frame to the next. For creators targeting a polished final edit and broadcast quality, such instability undermines confidence in the tool.

Beyond switching resolution and aspect ratio, Krea AI offers little control over style, pacing, camera behavior, or color grading. Creative professionals expect fine-grain creative controls, from look and mood presets to camera path and edit beats.

Limited customization blocks nuanced direction and prevents matching a brand or artistic intent. How can you deliver a precise vision without deeper style customization and creative controls?

Krea AI sits in a premium pricing tier, but many users find that the output quality and feature set do not justify the subscription or pay-per-render cost. When visuals are inconsistent and clip length remains capped, the return on investment weakens.

Competitors now offer faster generation, richer creative controls, and more reliable video quality at lower cost. Will your budget support a tool that limits what you can produce?

Several paying users report slow or missing replies from support, sometimes waiting weeks despite a promise of a 24-hour response time. When distorted visuals, rendering errors, or account issues appear, creators need timely help to keep production moving.

Poor help desk performance damages trust in any platform, especially for teams that depend on fast troubleshooting and reliable assistance. Who wants to wait for answers when a deadline is looming?


Look for platforms with strong AI-driven features:

Prefer systems that offer both generative and assistive features so you can create scenes from a short prompt or let the AI help polish timing, color, and pacing. Ask how the engine handles character animation, talking avatars, and animation voice, so you do not hit limits when you scale a concept.

Choose a tool with an extensive template library, flexible editing controls, and effortless style transfer so every video aligns with your brand voice. Check for scene-level controls, custom fonts and color palettes, and the ability to import your own assets and logos into the asset library. Can you save branded templates and apply them across campaigns to keep visual consistency?

Prioritize platforms that create multi-angle perspectives, photoreal motion, and realistic animations from prompts or images. Look for tools that generate dynamic scene variations, camera moves, and natural transitions so your content outperforms basic cut-and-paste edits.

Does the solution support animations, talking avatars, and style options like anime or cinematic looks without manual rotoscoping?

Find workflow features that speed production:

Check for API integration to plug the generator into your publishing or ad systems and for export presets tuned to social platforms. How fast can you produce ten videos with the same template and different copy?

Demand high-resolution exports, at least 1080p and preferably 4K, with smooth motion and no visible artifacts. Verify sample outputs for motion realism, audio sync, and natural lip sync on talking avatars, and confirm support for royalty-free stock footage and transparent backgrounds where needed. Will those final files play cleanly on mobile, desktop, and connected TV?

Confirm API and plugin support so the tool fits into your asset management, production, and publishing stack. Review permission controls, content ownership, and licensing for generated assets and third-party clips. What safeguards exist for sensitive content, and how easy is single sign-on for your team?

Compare pricing for commercial use, export limits, and additional costs for custom models or premium assets. Look for responsive support, active developer docs, and an engaged user community that shares templates and prompts. Can you test it on a pilot project before committing?

Try small experiments with a shortlist of platforms and measure time saved, quality gains, and how well the output matches your creative briefs. Which tool lets your team iterate fastest while keeping complete control over brand and rights?

Creating cool videos used to mean hours of editing and lots of technical know-how, but DomoAI's AI video editor changes that completely: you can turn photos into moving clips, make videos look like anime, or create talking avatars just by typing what you want.

It’s built so anyone can make engaging content without learning complex tooling, so you focus on the idea and the AI handles the technical work. Create your first video for free with DomoAI today!

DomoAI removes the need for advanced editing skills or complex video software. Describe what you want, and the AI generates animated clips, anime-style videos, or talking avatars. That lets creators focus on storytelling, character work, and visual experimentation rather than technical production.

Synthesia converts scripts into high-quality video in minutes. It supplies lifelike AI avatars and natural voiceovers across more than 140 languages so teams can scale training, marketing, and sales content without studio shoots.

Sora creates realistic one-minute videos from text prompts while keeping motion accurate and scenes coherent. It uses transformer-based diffusion models that predict motion and fill in missing frames for smooth results.

InVideo AI turns a typed idea into a complete video by letting you set length, target platform, and voiceover style. It pairs text-to-video generation with a stock media library and collaborative features for teams.

Good fit for marketers and small studios that need quick, specific videos with consistent voice and visuals.

Canva combines templates and AI design tools to produce graphics, slides, and videos. Its Magic Studio tools help generate images, edit video, and apply consistent branding across media assets.

Best for teams that need simple video editing tied into broader content production and brand systems.

Veed provides an AI-based editor that speeds up routine edits and production tasks. Features like automatic subtitles, text-to-video conversion, and voice cloning make it worthwhile for marketing, education, and sales content.

Capsule targets enterprise production with motion design systems that let employees create on-brand videos quickly. It converts motion graphics from After Effects into responsive templates so non-editors can assemble polished content.

Peech automates high-volume video production, repurposing, and localization. It creates many variations from a single source, inserts brand elements, and generates subtitles and translations for international reach.

Great for teams that publish many variants of the same core material across platforms and languages.

Hailuo AI focuses on automating video production, editing, and customization using deep learning models. It offers templates and neural enhancements for motion and voice realism.

Descript treats audio and video as editable text. Remove filler words, correct audio, and reshape videos without complex timelines. It adds AI features that simplify the production of podcasts and video episodes.

Pictory converts blog posts, transcripts, slides, or audio into short, engaging videos. It automates summaries and captions and draws on stock media to turn text into social-ready clips.

Works well for editorial teams, bloggers, and course creators who want fast video versions of existing content for social platforms.

Runway ML brings accessible machine learning tools to video editing. It supports text-to-video, generative audio, and selective motion tools, so editors can test creative variations without a full VFX setup.

Who benefits: visual artists, post-production teams, and creators exploring experimental generative AI workflows.

Vidyo.ai focuses on video repurposing for social platforms. Its AI finds scenes, applies transitions, and reframes multi-camera footage to create bite-sized clips that perform across channels.

Ideal for creators and marketers aiming to extract shareable moments from recorded webinars, podcasts, and long-form video.


Artificial intelligence (AI) and machine learning are revolutionizing many industries, including video production. This shift has created remarkable efficiency gains, with businesses reporting up to 80% reductions in production times and costs through advanced video automation.

These efficiencies let brands produce more content that drives sales. Whether you use video as a marketing tool or want videos to enhance your website and social media, here's how AI is changing the way videos are made.

One of the most time-consuming parts of video production is editing the raw footage together. AI tools are now emerging that can automate parts of the editing process by analyzing footage and making edits automatically.

For example, tools can scan videos to find the best shots, trim clips to optimal lengths, and suggest creative transitions between scenes. Tools like LTX Studio, Clipify.ai, and Luma AI can even turn flat images into video clips.

This allows video creators to focus more on the creative aspects of storytelling and less on technical editing tasks. The automated editing capabilities of AI can help both amateur and professional video creators by making the editing process faster and more efficient.

Adding visual effects to videos has traditionally taken a lot of manual effort and expertise. However, AI algorithms are getting much better at automatically generating realistic effects such as fire, smoke, and explosions.

Video makers can mark where they want an effect to appear, and the AI generates photorealistic effects that would previously have taken hours or days. This expands the visual possibilities for all video creators. Even indie filmmakers now have access to Hollywood-level visual effects through AI tools.

It can be tedious to watch hours of raw video footage to find the most relevant or interesting clips. AI solutions are emerging that can analyze long videos and automatically create short summaries by extracting the most informative or representative segments.

This “video summarization” functionality lets creators quickly get the gist of long videos. It saves a tremendous amount of time reviewing footage. Summarization can also be used to create teasers or trailers by identifying the most compelling parts of a complete video.
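One simple way to pick "representative segments" can be sketched as follows. This is an illustrative heuristic, not the method of any tool named in this article: it assumes each frame has already been reduced to a single number (hypothetically, its mean brightness), splits the video into fixed-length segments, scores each segment by how much the picture changes within it, and keeps the top `k` in playback order. Production systems typically score segments with learned visual and audio embeddings instead.

```python
from typing import List, Sequence


def segment_scores(frame_features: Sequence[float], seg_len: int) -> List[float]:
    """Score each fixed-length segment by its mean absolute frame-to-frame change."""
    scores = []
    for start in range(0, len(frame_features), seg_len):
        seg = frame_features[start:start + seg_len]
        diffs = [abs(b - a) for a, b in zip(seg, seg[1:])]
        # A one-frame segment has no changes to measure, so it scores zero.
        scores.append(sum(diffs) / len(diffs) if diffs else 0.0)
    return scores


def summarize(frame_features: Sequence[float], seg_len: int, k: int) -> List[int]:
    """Return the start-frame indices of the k most active segments, in playback order."""
    scores = segment_scores(frame_features, seg_len)
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(i * seg_len for i in top)


# Hypothetical per-frame brightness values for a 16-frame clip.
features = [0, 0, 0, 0, 5, 1, 6, 2, 1, 1, 1, 1, 3, 9, 0, 8]
print(summarize(features, seg_len=4, k=2))  # [4, 12]
```

Returning the chosen segments in playback order rather than score order keeps the summary watchable as a teaser.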

AI is enabling more interactive video experiences. For instance, tools are being developed that automatically generate tags and a visual outline for a video based on analyzing the visual and audio contents.

Viewers can then click on tags to jump to specific topics within the video or get personalized video recommendations. This makes videos more engaging and educational. Interactive elements keep viewers more actively involved and lead to better video engagement metrics.

As the amount of video content grows exponentially, finding relevant clips and sequences within large video libraries is becoming increasingly challenging. AI algorithms are getting much better at analyzing video content and automatically tagging videos to optimize search and discovery.

This allows creators to search their own media libraries easily and helps viewers find videos relevant to their interests. Accurate auto-tagging makes it easier for videos to be seen by search engines as well. This improves the discoverability of videos, enabling them to reach a wider audience.

AI is having a profound impact on video production by automating time-consuming tasks, enabling complex effects, summarizing video, making it interactive, and improving search and discovery. While human creativity is still essential, AI is dramatically improving productivity, raising quality, and enabling new video formats and experiences.

DomoAI gives you an AI video editor that shifts the work from tools to ideas. Upload photos, type a description, pick a style, and the platform generates moving clips. It uses image generation and motion models to add camera moves and animated elements, so you do not need long or complex editing timelines.

Want to animate a vacation photo or a product shot? DomoAI applies motion mapping and frame interpolation to create fluid movement from still images. The process uses prompt-driven controls similar to Krea AI prompt libraries so that you can dial in style, lighting, and mood without manual keyframing.
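The article does not describe how DomoAI's interpolation works internally. For orientation, the simplest form of frame interpolation is a linear blend between two key frames, sketched below on tiny grayscale frames represented as nested lists (an assumption made to keep the example dependency-free). Motion-based systems estimate where each pixel moves instead, which avoids the ghosting a plain blend produces.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly mix two equally sized frames: t=0 gives a, t=1 gives b."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def interpolate(a: Frame, b: Frame, n_between: int) -> List[Frame]:
    """Return n_between evenly spaced in-between frames, excluding the endpoints."""
    return [blend(a, b, i / (n_between + 1)) for i in range(1, n_between + 1)]


start = [[0.0, 0.0], [0.0, 0.0]]
end = [[1.0, 1.0], [1.0, 1.0]]
mid = interpolate(start, end, n_between=3)
print([f[0][0] for f in mid])  # [0.25, 0.5, 0.75]
```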

Want a cinematic look or anime styling? Choose from preset styles or enter an art direction prompt. DomoAI uses generative art models and style transfer techniques related to diffusion model workflows. You get consistent color grading, line treatment, or cel shading without deep model tuning.

Create a talking avatar by uploading a photo and typing dialogue. The editor aligns lip sync, head motion, and voice to your script. This uses facial landmark tracking and synthetic voice models, mirroring the quick prototype flow seen in AI creative studios like Krea AI where prompt engineering and iteration speed matter.

Are you a social creator, marketer, or product designer? DomoAI targets people who want quality videos fast. It supports creators who use prompt-based asset generation, collaborative workflows, and template-driven production. No need to learn advanced timelines or render pipelines.

The platform automates masking, background replacement, and motion smoothing. It integrates image upscaling and model-based denoising so assets look clean on phones and large screens. That reduces manual tracking, rotoscoping, and repeated render passes.

Use templates and an asset library to keep brand consistency. You can save presets like voice profiles, color profiles, and animation styles. Those assets plug into a prompt library and version history, similar to collaborative features found in creative model hubs and asset-driven platforms.

DomoAI plays well with standard generative tools. Export frames for further editing in image generation services, or use the files in vector or sketch-to-image pipelines. API support and standard codecs let you insert generated clips into broader creative systems built around diffusion models and prompt iteration.

You can create your first video for free. The free tier lets you test motion effects, anime styles, and talking avatars. Paid plans add higher resolution exports, priority generation, and team seats for shared libraries and control over model variations.

Try short, descriptive prompts that mention mood, camera angle, and art style. For more precise results, borrow the lighting and texture terms common in image generation communities. Combine the platform templates with prompt iterations to refine motion and voice timing.
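If you iterate on many prompts, a tiny helper keeps them consistent. The sketch below is hypothetical and not part of DomoAI; it simply joins the ingredients suggested above (subject, mood, camera angle, art style, and optional lighting and texture terms) into one comma-separated string you could paste into a prompt box, skipping any field left empty.

```python
def build_prompt(subject: str, mood: str, camera: str, style: str,
                 lighting: str = "", texture: str = "") -> str:
    """Join prompt ingredients into one short, comma-separated description."""
    parts = [subject, mood, camera, style, lighting, texture]
    # Drop empty or whitespace-only fields so the prompt stays clean.
    return ", ".join(p.strip() for p in parts if p.strip())


print(build_prompt("a lighthouse on a cliff", "calm", "slow aerial pan",
                   "anime", lighting="golden hour"))
# a lighthouse on a cliff, calm, slow aerial pan, anime, golden hour
```

Changing one field at a time between renders makes it easier to tell which word actually changed the output.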

Want to try a quick experiment? Upload a headshot, type one sentence of dialogue, choose an anime style, and run a free render to see how the system aligns animation and voice.


© 2026 DOMOAI PTE. LTD.

DomoAI