
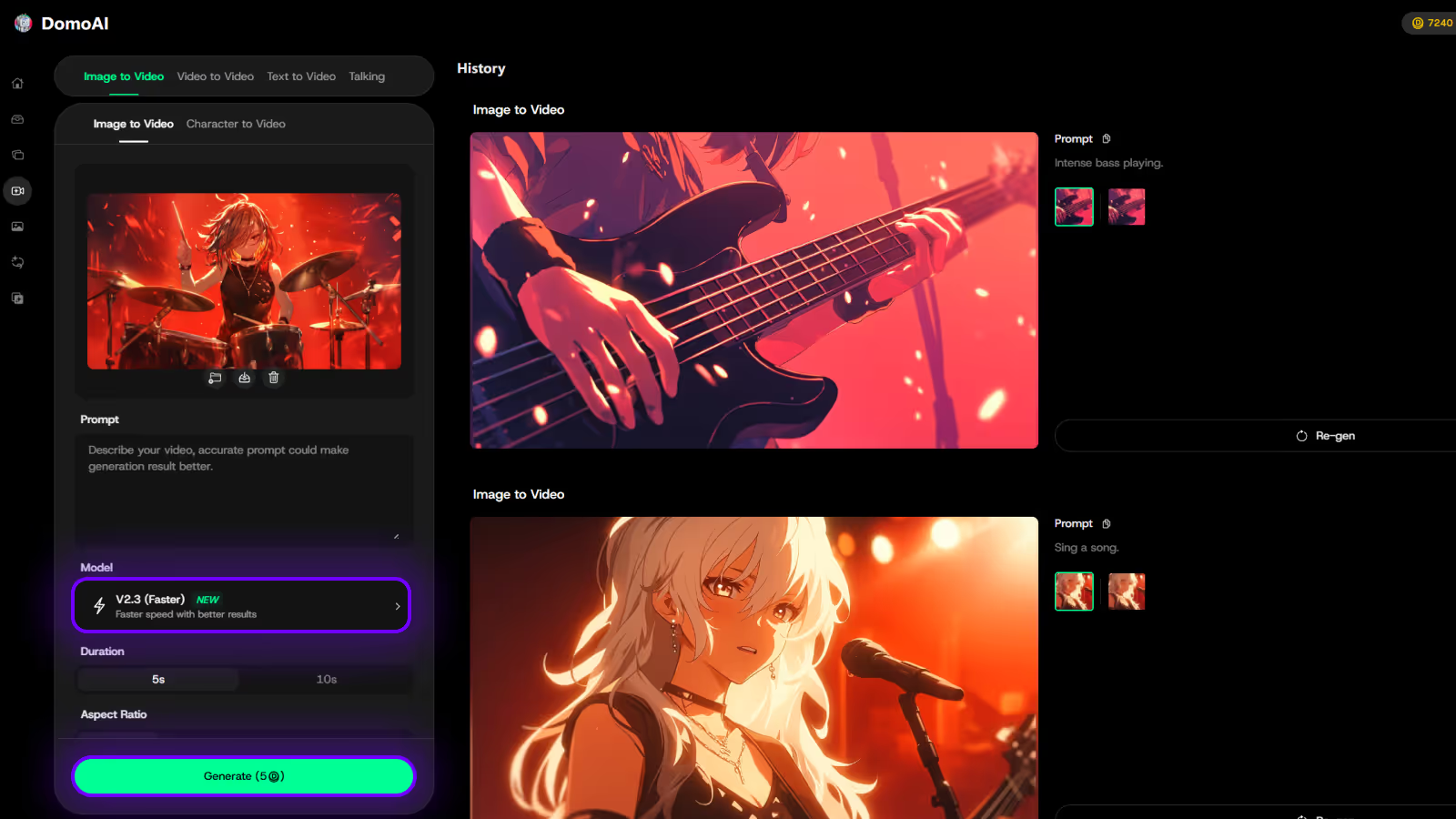


When you bring your projects into Veo 3, choosing the right AI tools for video can feel like swapping lenses on a camera: one choice sharpens motion cuts, another smooths audio, and a third builds synthetic scenes. Many creators face the same decision: stick with Higgsfield AI for automated edits, or explore options that promise faster content creation, better scene detection, and cleaner post-production. This article maps the best Higgsfield AI alternatives for AI video generation and editing. It compares generative AI video generators, neural network-based enhancement, automated captions, video templates, cloud editing, API and SDK support, and simple workflows to help you decide. Which features matter most to your workflow, speed, or final look? DomoAI's AI video generator steps in with easy templates, automated scene cuts, captioning, and simple controls, so you can test alternatives to Higgsfield AI inside Veo 3 and produce polished clips without a steep learning curve.

To take it further, DomoAI's AI Video upscaler offers a practical way to push quality beyond what Higgsfield AI typically delivers. Instead of spending extra hours cleaning noise, balancing colors, or sharpening footage in a separate editor, this tool automates those improvements directly in the workflow.

Users expect generative AI tools to accept their source files. Higgsfield AI focuses on converting existing images into motion, but it does not let creators upload original assets to generate visuals from scratch. That limits creative control for photographers, designers, and filmmakers who want to start from a concept, raw photo, or layered PSD.

Competitors that support image uploads, text-to-image, and mixed input let you iterate from a phone photo or a concept sketch into a finished clip, which makes them more appealing for real-world projects. Want to turn a phone photo into a cinematic sequence? With Higgsfield, you face extra steps and constraints.

The editing suite on Higgsfield AI focuses on the core transform but offers few fine-tuning options. Professionals look for:

They also want control over output resolution, codec choice, and noise handling when working with diffusion models or neural network-driven motion.

Without those features, creators must move finished clips into a separate non-linear editor (NLE) for fixes, which adds time and friction to the workflow. Do you prefer a single platform that handles both generation and precise post-production, or several tools stitched together?

Higgsfield AI centers on image-to-video conversion, not on a broader suite of creative AI. Many teams want text-to-image, text-to-video, style transfer, face animation, or rigged character export in a single platform. They also want APIs, batch processing, and project templates that scale across shoots or campaigns.

Platforms that combine multimodal generation, animation tools, and plugin support reduce handoffs and speed iteration. If your project needs generative AI integration across concept art, motion tests, and final renders, a single-purpose tool can feel too limited.

Several user reports note problems with billing defaults and refund handling. Moderators on the Higgsfield Discord have acknowledged that annual plans appear as the default, and some customers say they bought yearly subscriptions by accident. The stated refund policy covers unused credits and a seven-day window, but multiple Trustpilot reviews claim refund requests were denied or ignored.

Poor ticket response, unclear subscription terms, and inconsistent customer service increase user risk when adopting a creative AI service. Would you commit to a subscription for production work if support and billing practices could cost time or money?

Pick a platform that gives you multiple creation modes beyond just image-to-video conversion. Check for text-to-image, text-to-video, direct timeline editing, template-driven sequences, and prompt controls that let you dial in style and motion.

Ask whether you can train or import custom models and whether the tool supports batch jobs and storyboard workflows for longer projects. Expect:

You should be able to upload photos, raw video, and audio files, and then edit them in depth. Look for masking, layering, blending modes, keyframe animation, chroma key, color grading, and accurate frame-by-frame control rather than crude trimming.

The best alternatives accept wide file formats, let you store assets in the cloud or locally, and keep high bit depth so color and detail survive the edit. Check for versioning, team libraries, and export-ready media for post-production tools.

Not every creator needs a premium subscription from day one. Look for platforms that offer meaningful free tiers with actual creation capabilities, not just previews or watermarked outputs. DomoAI lets you create your first video completely free, while tools like Pictory and InVideo provide limited but functional access to their core features.

When comparing costs, calculate the real price per finished minute of video rather than just monthly fees. Some tools charge per render, others per export, and a few bundle everything into unlimited plans. Factor in resolution limits, watermarks, and commercial usage rights when evaluating free alternatives.
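To make the per-minute comparison concrete, here is a minimal Python sketch. The `Plan` fields and every price in it are hypothetical placeholders, not any vendor's real pricing; swap in the figures from the plans you are actually comparing. It also assumes you know roughly how many renders you discard for each usable minute of video.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    """Hypothetical pricing model; replace the figures with a vendor's real terms."""
    name: str
    monthly_fee: float               # flat subscription price per month
    per_render_fee: float            # extra charge per render (0.0 if bundled)
    renders_per_finished_min: float  # renders you typically need per usable minute


def cost_per_finished_minute(plan: Plan, finished_minutes: float) -> float:
    """Total monthly spend divided by the minutes of video you actually publish."""
    if finished_minutes <= 0:
        raise ValueError("finished_minutes must be positive")
    renders = finished_minutes * plan.renders_per_finished_min
    total = plan.monthly_fee + renders * plan.per_render_fee
    return total / finished_minutes


# Made-up example plans, for illustration only.
flat = Plan("flat subscription", monthly_fee=30.0, per_render_fee=0.0, renders_per_finished_min=4.0)
pay_per_render = Plan("pay per render", monthly_fee=0.0, per_render_fee=0.5, renders_per_finished_min=4.0)

for minutes in (10, 20):
    for plan in (flat, pay_per_render):
        cost = cost_per_finished_minute(plan, minutes)
        print(f"{plan.name}, {minutes} min/month: ${cost:.2f} per finished minute")
```

With these invented figures, the flat plan costs more at 10 finished minutes a month ($3.00 vs $2.00 per minute) but less at 20 ($1.50 vs $2.00), which is why monthly fees alone can mislead.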

Open-source alternatives give you full control over the code, data processing, and model training. While most commercial AI video generators keep their models proprietary, some creators prefer tools they can modify, self-host, or integrate into custom pipelines without vendor lock-in.

Consider whether you need the flexibility to train custom models, process sensitive content locally, or build video generation into your own applications. Open-source options typically require more technical setup and your own compute, but they offer deep customization and no recurring subscription fees.

Some creators want powerful results without learning complex interfaces or prompt engineering. User-friendly alternatives prioritize intuitive workflows, clear visual feedback, and helpful guidance over raw customization options.

Look for platforms with drag-and-drop interfaces, preset templates, and built-in tutorials. DomoAI emphasizes simplicity by letting you upload a photo, type a description, and get polished results without wrestling with technical settings. The best beginner-friendly tools hide complexity while still producing professional-quality output.

Review pricing and billing details before you commit, so you avoid the kinds of surprises some Higgsfield AI users report. Look for:

Confirm cancellation steps, trial limits, and whether enterprise credits or prepaid plans are refundable under specific conditions.

Support matters when a render stalls or a charge posts incorrectly. Prefer vendors with email ticketing, live chat, and active community forums with moderated answers and searchable knowledge base articles.

Check published service level expectations for response time on billing and technical issues, and whether enterprise accounts get a dedicated manager. Also, test how the team handles policy questions like deepfake safeguards and content moderation.

Test output quality on your own content: resolution, frame rate, temporal consistency, and facial stabilization are all measurable. Check for realistic animations, clean lip sync when generating talking avatars, and artifact reduction across long clips.

Measure render times on both preview and final export, and confirm:

Also, evaluate safety controls such as consent checks, watermarking, and model governance to avoid misuse.

A strong tool plugs into the systems you already use, from Adobe Premiere and After Effects to Zapier, webhooks, and a usable API. Look for export options like MP4, MOV, and WebM, project interchange formats, XML or EDL support, and presets for social platforms with correct aspect ratios and codecs.

Team permissions, shared libraries, and automation hooks make the platform valuable beyond single projects and reduce friction when moving work into post-production.

Creating cool videos used to mean hours of editing and lots of technical know-how. DomoAI's AI video generator changes that by letting you turn photos into moving clips, make videos look like anime, or create talking avatars just by typing what you want.

It handles the complicated parts so you can focus on ideas and content. Create your first video for free with DomoAI today!

DomoAI converts a photo and a short prompt into a moving clip, an anime sequence, or a talking avatar. Upload an image, write what you want, and the generative AI creates motion, lip sync, and stylization from that input. This workflow removes the need to learn complex editing software and keeps the focus on storytelling and visual direction.

Creators making short-form social posts, marketers testing creative concepts, and teams that need quick avatar or clip generation. The platform prioritizes accessibility and speed rather than deep VFX control.

DomoAI lets you create your first video for free so you can evaluate the prompt-to-video quality without spending up front. Try a simple prompt and test how it handles motion, facial animation, and stylized render passes.

Runway's Gen 3 model produces high-quality, photoreal, and stylized video from text and multimodal prompts. You can adjust aspect ratio, resolution, and stylistic parameters while combining Gen 3 with Runway's editing tools like Motion Brush and Camera Controls.

These integrations let you refine motion paths, isolate objects, and bring neural rendering into a conventional editing timeline.

Editors and filmmakers who want generative AI combined with more hands-on VFX and compositing. It supports motion synthesis and advanced camera effects for production workflows.

Runway exports standard codecs and preserves custom camera and motion metadata so you can finish projects in NLEs or use cloud-based publishing directly.

Pictory accelerates repurposing long-form text, articles, or scripts into short videos. Recent updates improved:

The voiceover engine was rolled back to a prior version that many users find more natural, and Brand Kits let you lock fonts, colors, and logos for consistent output across projects.

Social media managers, training teams, and creators working across languages since Pictory supports video creation in 29 languages with AI voices. The tool also adds convenience features like bulk scene deletion and new style presets to speed editing.

Pictory outputs platform-ready formats and supports team accounts for shared Brand Kits and review cycles.


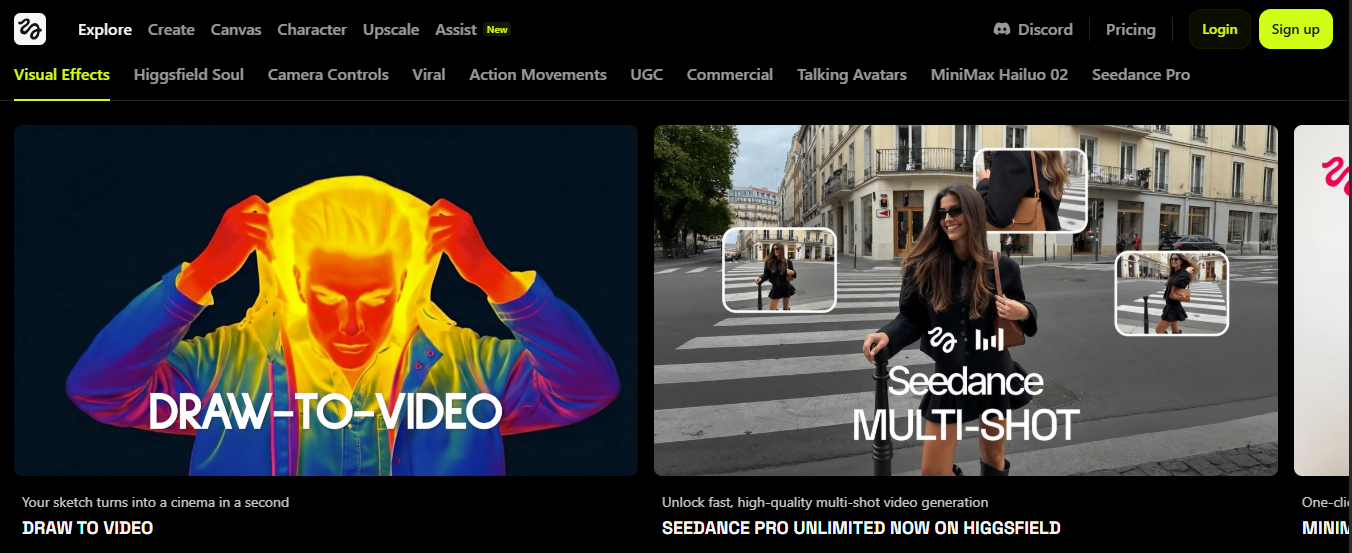

Basedlabs focuses on workflow efficiency. AI scene detection finds key moments and cuts, reducing manual trimming time. The platform supports simultaneous editing so teams can annotate, comment, and update projects in real time while avoiding version control problems.

Templates are customizable, so you can produce professional content quickly and export it in multiple formats optimized for different platforms.

Production teams that need repeatable templates, fast turnaround, and reliable scene analysis for long footage. It also supports motion metadata export and format presets for:

Basedlabs connects with standard cloud storage and allows multi-format exports to reduce additional conversion steps.

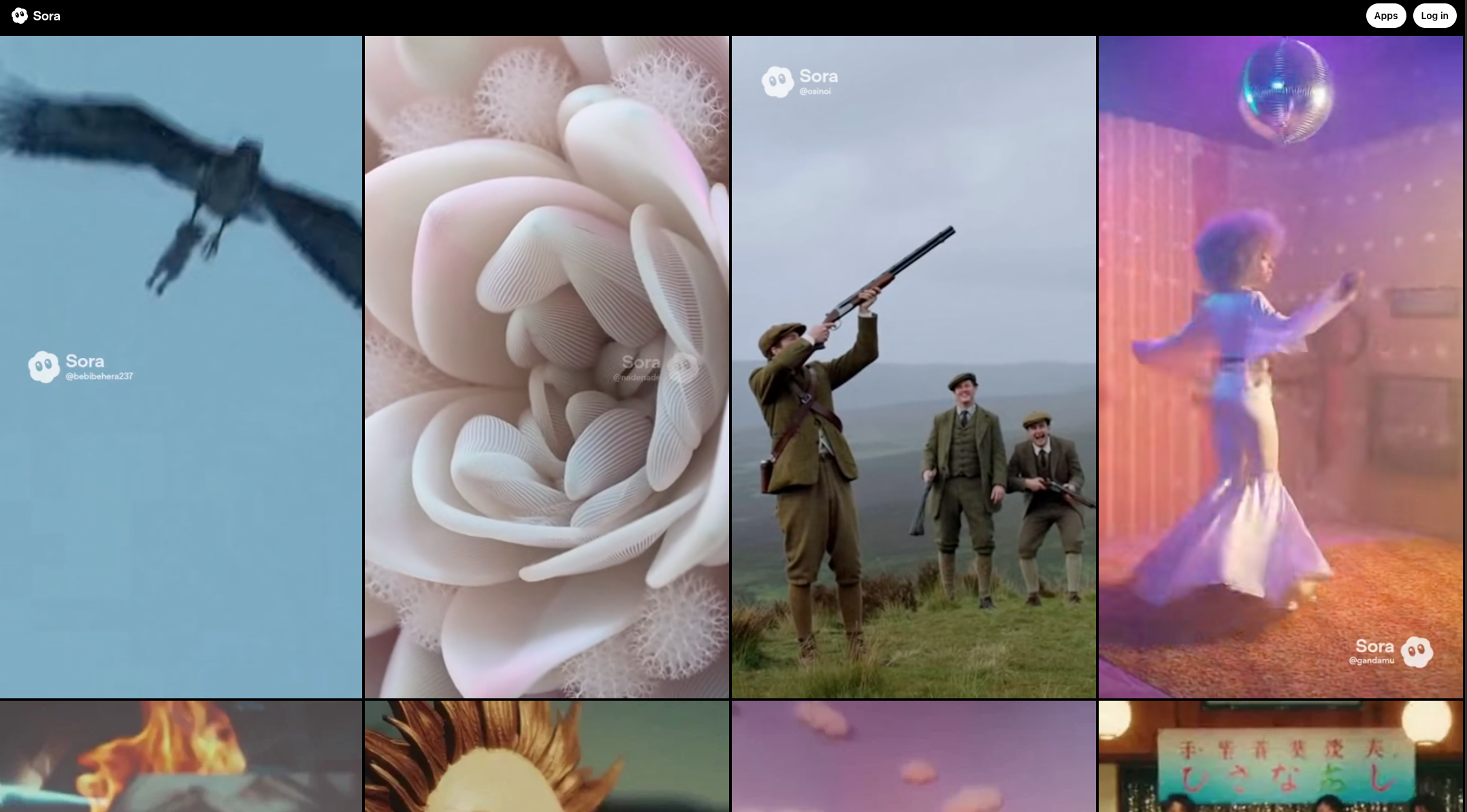

Sora focuses on text-based generation that handles complex scenarios such as detailed environments and character movements. Write a sentence like "a giant duck walks through the streets in Boston," and Sora will build a sequence with scene composition, lighting, and action. The engine is tuned for fidelity so motion, cloth, and interactions read more naturally.

Concept artists, storytellers, and teams creating proof-of-concept sequences where realistic behavior and environments matter. Sora gives you finer control over action descriptions and temporal continuity than many text-only generators.

It may require iterative prompting to reach exact choreography and photoreal detail, and higher fidelity outputs typically consume more generation credits.

Luma AI excels at turning real-world captures into 3D reconstructions and then producing camera flythroughs or stylized renders. The engine uses neural radiance fields and neural rendering techniques to synthesize novel views and realistic lighting from multi-angle photos or video.

This makes it a powerful choice for product visualization, architectural walkthroughs, and immersive content for AR experiences.

Creators needing volumetric outputs, 3D scene reconstruction, or textured model exports to downstream tools. Luma supports photogrammetry-style inputs and offers tools to clean and optimize models for rendering.

Outputs include textured meshes, point clouds, and rendered sequences for distribution that you can feed into:

Pika Labs turns short prompts into animated clips quickly and adds animation controls for static images. Its cloud-based platform lets you iterate without heavy local hardware and supports multilingual creation for global content.

The AI animation toolkit allows timeline tweaks and keyframe-style adjustments, so you can fine-tune motion after generation.

Social creators, rapid prototypers, and teams that need a responsive cloud workflow with adjustable animation controls. The platform prioritizes speed with enough power to refine the final output.

Projects live in the cloud and export to standard codecs or sequence frames for further VFX work.

Assistive blends AI script writing with an intelligent editing assistant that analyzes footage and suggests cuts, pacing changes, and transition points. It also supports voice cloning to create personalized voiceovers and team collaboration for feedback and version tracking. The system works as a creative assistant that reduces initial writer's block and surfaces technical edit suggestions.

Marketing teams and creators who need both content generation and guided editing. The tool speeds pre-production and editing while providing voice assets that match the brand tone.

Assistive runs in the cloud and integrates with standard media storage, letting teams share assets and track approvals in one place.

Pixverse provides granular controls for animation, shaders, and particle effects. Power users can tweak these settings to craft:

The platform is accessible via web and a Discord interface, which makes it convenient for communities and fast iteration, though the feature set can feel dense for new users.

Experienced animators and studios that want precise control over every render parameter and custom pipeline hooks. The system produces frames suitable for VFX finishing and compositing.

Expect a learning curve if you want to push the platform toward complex production goals, and plan to use tutorials or community guides to speed adoption.

InVideo focuses on simplicity and speed. It offers an extensive library of professionally designed templates ready for customization, plus media assets such as images and royalty-free music.

The editor provides filters, animated text, overlays, and a real-time preview so you can craft social clips and marketing videos without deep editing skills.

Marketers, small business owners, and creators who want fast results with polished templates and platform optimization tools for YouTube and social channels. It also helps with metadata like titles and descriptions for distribution.

InVideo supports animated overlays, interactive elements, and export presets that match platform aspect ratios and size limits.

Hailuo AI blends automated enhancement with text-to-video generation. The enhancement engine sharpens visuals and improves audio quality automatically, while the text-to-video system transforms short prompts into complete clips. The platform supports more than 30 languages and offers customizable templates for branded outputs.

Creators and businesses that need language coverage, automated quality improvements, and fast templated production. Its cloud-native setup lets teams access projects from any location and continue work without local dependencies.

Hailuo's enhancement pipeline tackles denoising, color grading, and audio leveling before export, so the generated footage arrives closer to publish-ready.

Higgsfield AI excels at image-to-video conversion but falls short on flexibility and editing depth. DomoAI offers similar simplicity with broader input options and style transformation. Runway provides professional-grade tools that Higgsfield lacks, while Pictory focuses on script-to-video workflows that Higgsfield doesn't support.

Most alternatives surpass Higgsfield in upload flexibility, with platforms like Luma AI handling 3D reconstruction and Sora managing complex scene generation from text alone. The key difference lies in scope: Higgsfield serves one specific workflow, while these alternatives offer comprehensive creative suites.

Free options like DomoAI's starter tier give you real creation capabilities, but advanced features require paid plans. Open-source alternatives provide complete control but demand technical expertise. User-friendly platforms prioritize ease over customization, while professional tools offer depth at the cost of complexity.

Consider your primary use case: quick social content creation favors simple tools like InVideo, while film production benefits from Runway's advanced controls. Budget-conscious creators can start with free tiers and upgrade based on results, while teams needing consistent output should evaluate collaboration features and brand management tools.

AI has transformed video production from a high-cost, specialist task into something scalable and accessible for everyday brand storytelling. For digital-first brands, this shift means video is no longer an occasional campaign asset; it's an ongoing language for engaging audiences, with over 3.48 billion people watching digital video worldwide.

To get the most out of AI video creation, consider these best practices:

Start with the value. Put the main point in the first three seconds and keep the pace brisk. Video engagement falls sharply after the first minute and drops fastest in those first seconds. Which element will stop your audience before they scroll? Use that element first.

Then structure the script with progressive disclosure: lead with the core message, add supporting facts, and finish with secondary detail. Progressive disclosure ensures your key message lands even if viewers do not watch the whole clip.

Design for visual variety. Use scene changes, dynamic on-screen text, motion, and contrasting shots to hold attention. Keep text large and readable on small screens. Tight framing helps focus the eye on faces and product details.

Most branded clips should stay under two minutes unless you have content that truly demands more time.

Test thumbnails and openings. Higgsfield AI and similar tools can generate multiple thumbnail options and predict which visuals lift click-through rates. Try several thumbnails, then let the platform surface the best performer.

Use A/B testing to compare openings and lead images so you stop guessing and start optimizing.
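If your platform does not report significance for you, a two-proportion z-test is one common way to check whether a click-through difference between two thumbnails or openings is more than noise. The sketch below is a standard statistics technique, not a feature of any tool named in this article, and the click and impression counts are invented.

```python
import math


def ctr_z_test(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> tuple[float, float]:
    """Two-proportion z-test on click-through rate for variants A and B.

    Returns (z, two_sided_p_value). Uses the pooled normal approximation, which
    is only trustworthy when both variants have plenty of views and clicks.
    """
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("no variation in clicks; the test is undefined")
    z = (rate_b - rate_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical results: thumbnail A got 120 clicks from 4,000 impressions,
# thumbnail B got 160 clicks from 4,000 impressions.
z, p = ctr_z_test(120, 4000, 160, 4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, B's 4.0% click-through rate beats A's 3.0% with p of roughly 0.015, below the conventional 0.05 threshold. With smaller samples, the same gap could easily be noise.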

Add automated captions and translations to reach more people. Captions help viewers who are deaf or hard of hearing and improve comprehension in noisy environments. AI-powered translation and subtitle tools can produce multilingual versions quickly, extending reach with minimal extra work. Higgsfield AI-style tools often handle both captions and language localization at scale.

Keep voice and brand consistent. Use voice cloning and reusable AI-generated voice assets to create a stable presenter across many videos. Use AI-driven color grading to keep your visuals consistent across phones and monitors.

Try dynamic text replacement to tailor on-screen copy by source or audience segment. Those personalization features work best when paired with a naming and asset strategy so variants remain organized.

Let AI suggest CTA timing. Platforms with engagement analytics can point to ideal spots for calls to action so you increase conversions without disrupting the user experience. Use that insight to place CTAs where viewers are most receptive.

Most viewers watch on phones in distracting settings. Prioritize large, readable text, clear visual hierarchy, and tight shots on key elements. Frame human faces and products to remain clear in vertical or square crops.

Which format matches each channel?

Create platform-specific versions rather than stretching one master clip into every feed.

Use AI to reframe and repitch. Higgsfield AI and other generative video tools can reframe scenes, change pacing, and adjust captions automatically to match platform best practices without starting from scratch. Preserve your core message and branding while tailoring visuals and timing to each platform.
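Automatic reframing tools may track the subject across frames, but the underlying geometry is a crop to the target aspect ratio. Here is a minimal center-crop sketch. It assumes a fixed center focus, which is a simplification that will cut off off-center subjects, and it uses 9:16 and 1:1 only as example targets.

```python
import math
from fractions import Fraction


def center_crop(width: int, height: int, aspect_w: int, aspect_h: int) -> tuple[int, int, int, int]:
    """Largest centered crop of a width x height frame at aspect_w:aspect_h.

    Returns (x, y, crop_w, crop_h) in pixels. Dimensions are rounded down to even
    numbers because common 4:2:0 encodes (e.g. H.264 yuv420p) need even sizes.
    """
    target = Fraction(aspect_w, aspect_h)
    if Fraction(width, height) > target:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = math.floor(height * target)
    else:
        # Source is taller than (or equal to) the target: keep full width, trim top and bottom.
        crop_w = width
        crop_h = math.floor(width / target)
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    x = (width - crop_w) // 2
    y = (height - crop_h) // 2
    return x, y, crop_w, crop_h


# A 1920x1080 (16:9) master reframed for vertical and square feeds.
print(center_crop(1920, 1080, 9, 16))  # (657, 0, 606, 1080)
print(center_crop(1920, 1080, 1, 1))   # (420, 0, 1080, 1080)
```

Exact rational arithmetic via `Fraction` avoids floating-point drift when comparing aspect ratios. Any smart reframing step would replace the fixed center with a tracked subject position.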

Craft the first three seconds for sound off. Open with clear visual cues that signal relevance fast. Show the problem, the product, or the promise in an image and short text so the viewer understands why to keep watching even without audio.

Gather detailed metrics. Track watch time, drop off points, and conversion rates to see what works. Use analytics in Higgsfield AI or comparable platforms to pinpoint where viewers leave and where they act. Ask which scenes correlate with conversions and which cause drop-off.
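Most platforms chart retention for you, but if you can export the raw curve, finding the worst drop-off points is simple arithmetic. A minimal sketch, assuming retention is exported as the share of viewers still watching at fixed intervals. The data format and the numbers are hypothetical.

```python
def biggest_drop_offs(retention: list[float], interval_s: int, top_n: int = 3) -> list[tuple[int, float]]:
    """Rank the segments of a video where the most viewers leave.

    retention[i] is the share of viewers still watching at i * interval_s seconds
    (1.0 at the start). Returns up to top_n (segment_start_second, share_lost)
    pairs, largest loss first.
    """
    drops = [
        (i * interval_s, retention[i] - retention[i + 1])
        for i in range(len(retention) - 1)
    ]
    drops.sort(key=lambda item: item[1], reverse=True)
    return drops[:top_n]


# Hypothetical retention curve sampled every 3 seconds.
curve = [1.0, 0.62, 0.55, 0.52, 0.40, 0.38]
for start, lost in biggest_drop_offs(curve, interval_s=3, top_n=2):
    print(f"{start}-{start + 3}s: lost {lost:.0%} of viewers")
```

In this invented curve, the opening three seconds and the 9 to 12 second segment lose the most viewers. Those are the scenes to compare against your conversion data.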

Run experiments and refine assets. Use A/B testing on thumbnails, openings, and CTAs. Make minor edits in the AI-driven editor and redeploy variants quickly. Many tools now offer studio-quality editing with simpler interfaces so teams can iterate faster without a steep learning curve.

Create a feedback loop. Use data to shape scripts, pacing, and visuals for the next round. Combine quantitative metrics with qualitative feedback from real viewers to spot friction that numbers alone miss. Over time, the process reduces wasted spend and raises the return on your AI video investment.

Higgsfield AI is a tool that focuses primarily on converting images into video using AI. People seek alternatives because it lacks file upload capabilities for original assets, offers limited editing features, focuses on a single functionality (image-to-video), and has reported issues with customer support and billing practices. Many creators need more comprehensive tools that handle multiple creation modes, provide professional editing controls, and offer reliable customer service.

DomoAI is ideal for beginners. It offers a simple interface where you can create your first video for free by uploading a photo and typing a prompt; the AI handles motion, lip sync, and stylization automatically. The platform prioritizes ease of use over technical complexity, which makes it a strong starting point for creators new to AI video generation.

Yes, several alternatives offer free tiers or trials. DomoAI lets you create your first video for free to test its features. Runway, Pika Labs, and Pictory typically offer trial credits or limited free access. However, free tiers usually come with restrictions on video length, resolution, watermarks, or the number of monthly generations. For professional, commercial use at scale, most platforms require paid subscriptions.

Runway stands out for professional workflows, offering Gen 3 video generation combined with advanced editing tools like Motion Brush and Camera Controls. It integrates neural rendering with conventional timeline editing and exports standard codecs with metadata for finishing in professional NLEs. Pixverse is another strong choice for studios needing granular control over animation, shaders, particle effects, and motion curves, though it has a steeper learning curve.

Generation times vary widely based on video length, resolution, and complexity. Simple image-to-video conversions with DomoAI or Pika Labs take 30 seconds to a few minutes. Complex text-to-video sequences with Sora or detailed 3D reconstructions with Luma AI can take 5-20 minutes per clip. Several platforms support batch processing or queued renders—Basedlabs, Runway, and Assistive offer this for production workflows. Check each platform's documentation for specific render times and batch capabilities.

Usage rights vary significantly by platform. Most tools grant commercial usage rights to generated content on paid plans, but retain certain platform rights. Some platforms watermark free-tier outputs. If you're using voice cloning, uploaded photos of people, or branded materials, ensure you have appropriate consent and licensing. Always review each platform's terms of service regarding intellectual property, especially for commercial projects. For sensitive or high-stakes commercial use, consult with legal counsel about your specific rights.

This depends on your use case. For social media content creators who need speed and volume, prioritize ease of use and template availability (DomoAI, InVideo, Pictory). For filmmakers and VFX artists requiring precise control and high fidelity, prioritize generation quality and advanced editing features (Runway, Pixverse, Luma AI). For teams handling diverse projects, look for balanced platforms with good quality, reasonable learning curves, and collaboration features (Basedlabs, Assistive). Test multiple platforms with your actual content before committing—most offer free trials or starter credits.

Sign up, upload a photo or short clip, pick a style, type the script or description, and render. The free tier lets you experiment with features before scaling to higher resolution exports or commercial licenses.

Want a quick sample project to compare motion synthesis and avatar quality against Higgsfield AI? Create your first video for free with DomoAI and see how the tools fit your workflow.


© 2026 DOMOAI PTE. LTD.

DomoAI