
If you shoot with a Veo 3 camera, you face hours of footage that must be trimmed, synced, and polished before it reaches players or clients. LTX Studio promises AI video editing, automatic transcription, and motion templates, yet many creators still wrestle with slow renders, clumsy timelines, or weak collaboration. Want a more straightforward way to compare options and find tools that save time and respect your workflow? This article walks you through the best LTX Studio alternatives for AI videos and editing, highlighting features like automatic cut, auto subtitles, scene detection, cloud collaboration, color grading, and export formats so you can pick the right fit.

To help you test real alternatives, DomoAI's AI video editor offers automated cuts, automatic captions, template-based motion graphics, and cloud-based collaboration so you can move from raw footage to polished video faster.

Many users want transparency in how LTX Studio turns scenes and keyframes into an AI-generated script. Seeing the complete compiled prompt helps creators predict output, tune style tokens, and avoid surprises like an “animated” scene rendering in anime style instead of HDR.

Exporting or showing that text also makes debugging faster, supports reproducible results, and lets teams version control changes to narrative prompts. Would you rather tweak text than re-render entire shots to test one word?
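To make the idea concrete, here is a minimal Python sketch of what a "compiled prompt" and its version fingerprint could look like. The scene layout, the `compile_prompt` function, and the output format are all hypothetical; LTX Studio's internal prompt format is not documented in this article, so treat this as an illustration of the workflow rather than the tool's actual behavior.

```python
import hashlib


def compile_prompt(scene: dict) -> str:
    """Flatten a scene description into one prompt string (hypothetical format)."""
    lines = [scene["description"]]
    style = scene.get("style_tokens", [])
    if style:
        lines.append("style: " + ", ".join(style))
    for kf in scene.get("keyframes", []):
        lines.append(f"[{kf['time']:.1f}s] {kf['action']}")
    return "\n".join(lines)


def prompt_fingerprint(prompt: str) -> str:
    """Short SHA-256 digest: identical prompts always get identical IDs."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]


# Illustrative scene data, not taken from any real project.
scene = {
    "description": "A courier cycles through a rainy street at dusk",
    "style_tokens": ["cinematic", "HDR"],
    "keyframes": [
        {"time": 0.0, "action": "wide shot, camera tracks left"},
        {"time": 2.5, "action": "close-up on the courier's face"},
    ],
}

compiled = compile_prompt(scene)
print(compiled)
print("fingerprint:", prompt_fingerprint(compiled))
```

Storing the compiled text and its fingerprint next to each render lets a team diff two prompts in version control and see exactly which word changed between takes.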

Voice direction and mouth movement drive believability in character work. Creators ask for phoneme-level timing, finer control over intonation, and easier layering of multiple voice tracks in a single scene.

They also want integrated voice cloning, better language handling, and visual cues for where audio lines will hit a keyframe. When lips and audio fall out of sync, that gap becomes the first thing the audience notices.

Many editors need a true shot hold option so a single frame can play longer without adding dummy keyframes or freezing layers. A native hold feature would reduce timeline clutter and keep camera motion curves intact.

That saves time on trimming and fixes odd interpolation around a pause. How often do you restructure a scene just to stretch one beat?

High-quality renders in LTX Studio can be computationally intensive for long projects. Creators look for quicker local previews, proxy rendering, or batch GPU export to speed iteration.

A mixed cloud and local render model or a render queue with priority settings would shorten feedback loops and reduce cloud costs. Faster previews let creative decisions happen in minutes, not hours.
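A render queue with priority settings can be sketched in a few lines of Python. The `RenderJob` and `RenderQueue` names, the priority numbers, and the `"local"`/`"cloud"` targets are assumptions made for illustration; this is not an LTX Studio API. Lower numbers run first, and jobs with equal priority keep their submission order.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class RenderJob:
    priority: int                       # lower value = rendered sooner
    seq: int                            # tie-breaker: submission order
    name: str = field(compare=False)
    target: str = field(compare=False)  # "local" or "cloud" (hypothetical labels)


class RenderQueue:
    def __init__(self):
        self._heap = []
        self._counter = itertools.count()

    def submit(self, name, priority, target="local"):
        job = RenderJob(priority, next(self._counter), name, target)
        heapq.heappush(self._heap, job)

    def next_job(self):
        """Pop the most urgent job, or return None when the queue is empty."""
        return heapq.heappop(self._heap) if self._heap else None


queue = RenderQueue()
queue.submit("final_4k_export", priority=5, target="cloud")
queue.submit("proxy_preview_scene_3", priority=1)
queue.submit("proxy_preview_scene_4", priority=1)

order = []
while (job := queue.next_job()) is not None:
    order.append(job.name)
print(order)
```

Here the two quick proxy previews jump ahead of the long 4K export even though the export was submitted first, which is the behavior that shortens feedback loops.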

Some teams require offline operation for confidentiality or to use on-site GPU farms. Local rendering also reduces dependence on internet speed and cloud credits.

An option to run LTX Studio workflows on-premises, with local asset caching and export, appeals to teams with these confidentiality and infrastructure requirements.

Do you need everything to stay behind your firewall?

A larger built-in stock asset catalog and more free clips would speed production for creators who cannot buy high-priced footage. Better asset tagging, version control, and license metadata inside the project would improve reuse across episodic work.

Integrated templates and scene presets that ship with royalty-free assets would cut prep time.

LTX Studio balances ease of use with advanced tools, but camera controls, advanced keyframing, and some voice features still carry a learning curve. Creators ask for step-by-step tutorials, more in-app tips, and example projects that show complex setups from start to finish.

Clearer documentation reduces friction for teams trying to scale workflows.

Teams expect flexible exports for post-production:

API access, SDKs, and integration with Premiere or Final Cut speed handoffs to editors and sound designers. Pricing tiers and collaborative project sharing also shape which tool fits a studio pipeline.

Users praise LTX Studio for storyboarding, strong character persistence, intuitive UI, and cinematic output. That praise also fuels curiosity about other tools that might add a missing feature or speed up one part of the workflow.

Which specific gap matters most to your projects, and do you want recommendations for alternatives that match those needs?

Ask whether the platform covers:

Check for easy remote collaboration, low-latency output, and strong support for chroma key or virtual set replacements. Do you need automated clipping for social clips or quick repurposing of recorded events?

Choose platforms with robust AI-driven features such as text-to-video generation, automatic transcript-to-highlights, and AI-assisted scene creation. These tools should support automatic camera framing, speaker detection, and smart editing that turn raw footage into polished segments without long manual passes.

Want to test how fast you can turn a recorded event into short social reels or highlight clips?

Look for extensive template libraries, motion graphics packs, and custom styling controls so you can keep visuals consistent across episodes and channels.

Flexible timeline editing, keyframe control, and scene-level presets let you tune pacing, color, and motion to match brand voice. Can you import your fonts, color palettes, and lower third designs easily?

Seek tools offering multi-angle render options, realistic character animation, and dynamic scene generation that create new camera moves or alternate compositions from the same source.

Effective generative models will supply lip-synced talking avatars, stylized looks like anime or cinematic grading, and procedural backgrounds that replace green screen work. How much of your post-work can be handed to generative processes without losing creative control?

Prioritize platforms that support batch processing, automated scene adjustments, and quick rendering pipelines. Look for cloud rendering, scheduled exports, and integrations with social publishing tools so you can publish at scale.

Does the tool let you move from draft to final in a few automated steps while keeping multiuser workflows intact?

Pick a solution that exports consistently at 1080p and 4K, preserves frame rates, and maintains clean compression for motion-heavy scenes. Check for hardware-accelerated rendering, color management, and bitrate controls so visuals remain sharp and motion stays natural across streaming and download.
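When you compare bitrate controls, two quick calculations help: the approximate file size for a given bitrate and duration, and the bits available per pixel per frame. The Python sketch below shows both. The bitrates used (8 Mbps for 1080p, 35 Mbps for 4K) are illustrative assumptions, not recommendations from any platform, and the size estimate covers the video stream only, ignoring audio and container overhead.

```python
def estimated_size_mb(bitrate_mbps: float, duration_s: float) -> float:
    """Video stream size in megabytes: megabits/s * seconds / 8 bits per byte."""
    return bitrate_mbps * duration_s / 8


def bits_per_pixel(bitrate_mbps: float, width: int, height: int, fps: float) -> float:
    """Average bits spent on each pixel of each frame."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)


# Hypothetical export presets: (width, height, fps, bitrate in Mbps).
presets = {
    "1080p": (1920, 1080, 30, 8.0),
    "4K": (3840, 2160, 30, 35.0),
}

for label, (w, h, fps, mbps) in presets.items():
    size = estimated_size_mb(mbps, duration_s=60)
    bpp = bits_per_pixel(mbps, w, h, fps)
    print(f"{label}: ~{size:.0f} MB per minute, {bpp:.3f} bits per pixel")
```

A lower bits-per-pixel figure at the same codec generally means more visible compression in motion-heavy scenes, so it is a useful number to compare across export settings.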

Will your final files play cleanly on:

Creating cool videos used to mean hours of editing and lots of technical know-how. DomoAI's AI video editor changes that: you can turn photos into moving clips, make videos look like anime, or create talking avatars just by typing what you want.

It’s built so anyone can make engaging content without learning complex tooling, so you focus on the idea and the AI handles the technical work. Create your first video for free with DomoAI today!

DomoAI turns still images into moving clips and creates talking avatars from typed scripts. It favors ease of use over granular storyboarding, so creators can move from idea to finished clip without wrestling with timeline controls or complex scene composition.

You can switch visual styles from:

It pairs well with LTX Studio when you want fast social posts or experimental shots rather than cinematic scene control. The platform offers a free first video so you can test its creative workflow before subscribing.

Veo 3 generates natural-looking scenes and layers matching background sound from simple text prompts. It renders smooth motion, believable lighting, and environmental audio like crowd noise or traffic, which helps with continuity and immersion.

Templates and voiceover support make it approachable for content teams and single creators. Watch for:

Use it when you need prompt-driven, realistic scenes with quick template-based assembly.

Kling AI produces short, polished videos with cinematic lighting and camera work, such as pans and zooms. The output quality leans toward professional grade, with smooth motion and detailed render quality.

Limitations include short maximum clip length and missing lip sync in some cases. Pricing reflects the high quality and may be a factor if you need large volumes. Choose Kling when you want short ads, promos, or high-fidelity snippets without deep editing.

Luma AI focuses on speed and photoreal motion. It can produce many frames very quickly and uses motion that respects physical behavior for natural tracking shots and 360-degree moves. The platform supports multi-scene sequences so you can stitch longer narratives with consistent camera planning.

Some built-in editing tools are lighter than what editors expect, which makes it well-suited to teams that export to a conventional NLE for finishing. Use Luma when realistic motion and rapid rendering matter.

Pika animates uploads into short HD clips and specializes in novel visual effects: items sliced, crushed, or inflated, and other creative transformations.

It caps outputs around ten seconds, which fits:

The tool emphasizes effect-driven creativity rather than scene continuity or multi-shot storyboarding. Try Pika for punchy, single-idea pieces where visual novelty matters.

Hailuo AI converts text or images into 6- to 10-second cinematic clips with smooth camera moves like tracking shots. Characters hold consistent facial features and outfits across scenes, improving continuity and character persistence for short narratives.

Resolution support reaches 1080p. Some complex motion can still appear slightly glitchy, so keep heavy action modest. Ideal use cases include teaser clips and product showcases that need crisp framing.

Haiper AI interprets text prompts accurately and often produces lively, colorful videos with realistic motion and physics. It animates stills well and supports varied motion styles.

The UI keeps the process simple and accessible, which helps teams turn a script into a clip rapidly. If you need predictability and a balanced feature set between effects and realism, Haiper is a dependable choice.

Vidu AI responds quickly to detailed direction and handles artistic and cinematic looks with equal skill. You can convert sketches or images into animated sequences and preserve drawn characters across shots.

The editor gives fine control over:

This tool fits creators who want to guide style closely while avoiding deep manual editing.

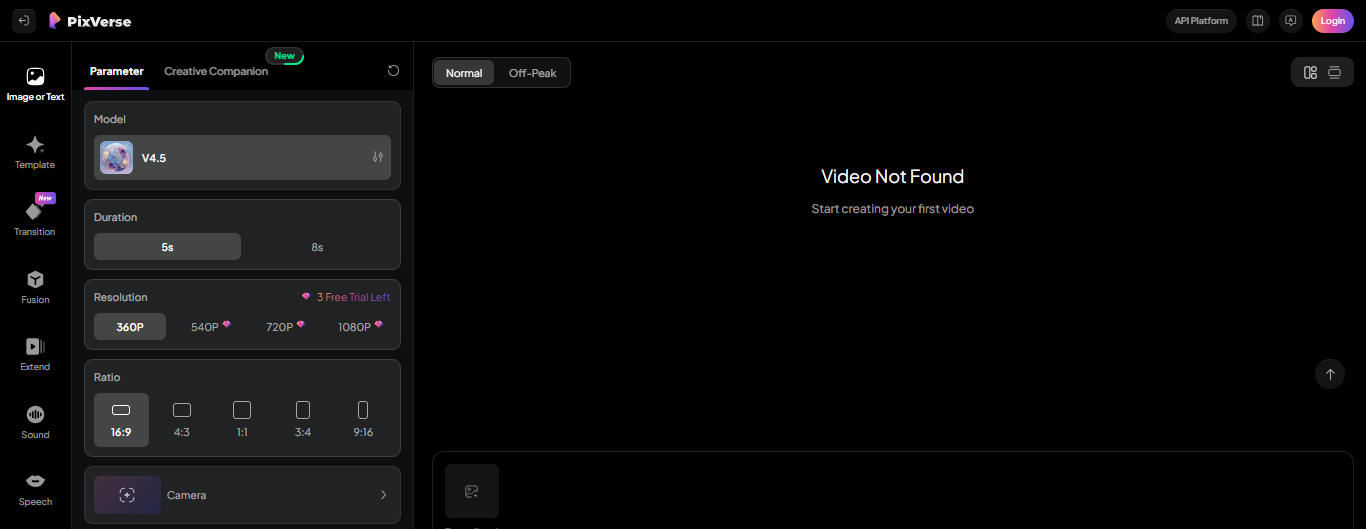

PixVerse AI offers many technical settings for fine-tuning render style, duration, aspect ratio, and special effects. It demands a learning curve but rewards users with granular control over the scene:

Camera controls feel limited compared with some competitors, so plan shots with that constraint in mind. Use PixVerse when you want to push creative control and already understand prompt-to-video workflows.

KREA strips the interface down to essentials so newcomers can generate clips quickly. It provides model switching to try different visual styles and has quality enhancement tools to boost output fidelity.

A free plan exists with usage limits, making KREA a reasonably low-risk starting point. Expect a straightforward script-to-video flow rather than an advanced scene timeline or persistent character systems.

Runway pairs text-to-video generation with a nonlinear timeline, versioned clips, and frame-level editing. It supports inpainting, scene continuity tools, and model options for different looks.

Teams use Runway when they need a hybrid workflow that mixes AI generation with conventional editing:

It scales well for collaborative projects that require both creative control and AI-assisted speed.

Pictory builds videos from scripts using stock footage, music, and voiceovers through template-driven workflows. It focuses on content production for:

It has simple editing steps and clear tutorials.

Users can add:

Pictory suits creators who prefer template-led assembly over frame-by-frame scene control and need quick turnarounds.

Elai generates video with digital avatars speaking scripted text and supports more than 75 languages. You can select from a diverse avatar library or request a custom avatar from submitted footage.

The workflow is efficient for localized corporate messages, training, or marketing where on-camera talent is costly. Elai reduces shoot complexity and helps scale personalized video production.

Hour One converts text into videos using selectable characters and themes, producing polished product and training content in minutes. It targets business users who need repeatable, scalable outputs without full production teams.

The UI focuses on speed:

This tool works well for enterprises that need consistent messaging across many clips.

Colossyan aims at workplace learning by letting creators build training videos using avatars, captions, and an editor designed for clarity. The platform makes it easier to produce studio-style material without a film crew.

Users report strong support and steady feature updates, while some symbol handling and edge cases can be improved. Pick Colossyan when you need efficient, consistent educational content with rapid turnaround.


Generative Adversarial Networks and diffusion models generate realistic visuals and entire scenes from text prompts, while transformer-based NLP handles:

These systems let an AI video platform convert a written brief into timing, camera moves, and assets automatically.

LTX Studio ties these engines together with real-time rendering, synthetic media avatars, and automated editing pipelines so teams can move from script to screen without a full film crew.

Traditional production required weeks of planning, casting, location shoots, and long post-production cycles. The AI-enhanced workflow replaces many manual steps:

This shift has created remarkable efficiency gains, with businesses reporting up to 80% reductions in production times and costs through advanced video automation. These efficiencies allow brands to produce more content that drives sales.

LTX Studio and similar cloud video production platforms collapse pre-production and editing into the same session, cutting handoffs and storage transfers, and enabling continuous iteration.

AI video tools focus on three business outcomes that tie directly to revenue:

These capabilities integrate with ad platforms, analytics, and asset management systems so creative teams can treat video like a data-driven marketing channel.

LTX Studio exposes APIs for automated publishing and tagging, which makes creative output actionable for growth teams.

AI makes it practical to create many culturally tuned versions from one concept. Replace backgrounds, swap voiceovers, change wardrobe colors, and swap on-screen text to match language and cultural cues.

A cosmetics brand can produce French spots with lavender fields and Asian spots with tropical visuals from a single brief.

LTX Studio provides localized avatars, automated dubbing, and bulk rendering queues so teams can push hundreds of regional variants while tracking engagement by market.
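One simple way to think about regional variants is a master brief plus a small set of per-market overrides. The Python sketch below illustrates that structure. Every field name, market code, and value is a hypothetical example built around the cosmetics scenario above; it does not reflect LTX Studio's project format.

```python
# Master brief shared by every market (illustrative values).
MASTER_BRIEF = {
    "concept": "spring launch",
    "background": "studio",
    "voiceover_lang": "en",
    "on_screen_text": "New season, new look",
    "wardrobe_color": "white",
}

# Only the fields that differ per market are listed here.
MARKET_OVERRIDES = {
    "fr-FR": {
        "background": "lavender fields",
        "voiceover_lang": "fr",
        "on_screen_text": "Nouvelle saison, nouveau look",
    },
    "id-ID": {
        "background": "tropical beach",
        "voiceover_lang": "id",
    },
}


def build_variants(master: dict, overrides: dict) -> dict:
    """Return one full brief per market; the master brief is left unchanged."""
    return {market: {**master, **patch} for market, patch in overrides.items()}


variants = build_variants(MASTER_BRIEF, MARKET_OVERRIDES)
for market, brief in variants.items():
    print(market, "->", brief["background"], "/", brief["voiceover_lang"])
```

Keeping overrides small makes it obvious what actually changes per market, and a change to the master brief flows into every variant the next time they are built.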

Instead of guessing which headline or CTA will convert, generate dozens of creative permutations and serve them to small cohorts.

Automated metrics collection ties each creative variant to:

LTX Studio supports multi-version testing, variant tagging, and export to analytics platforms so marketers can identify winning elements within hours and allocate spend to the best performers.
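Generating creative permutations is a straightforward combinatorial step. The Python sketch below builds every combination of headline, CTA, and music track, then picks the variant with the best conversion rate from some sample numbers. The copy, the variant IDs, and the result figures are all made up for illustration, and a real test would also need a significance check before spend is reallocated.

```python
import itertools

# Illustrative creative elements, not taken from a real campaign.
headlines = ["Fresh drop", "Back in stock", "Last chance"]
ctas = ["Shop now", "Learn more"]
music_tracks = ["upbeat", "ambient"]

# One variant per combination: 3 headlines * 2 CTAs * 2 tracks.
variants = [
    {"variant_id": f"v{i:03d}", "headline": h, "cta": c, "music": m}
    for i, (h, c, m) in enumerate(
        itertools.product(headlines, ctas, music_tracks), start=1
    )
]


def conversion_rate(impressions: int, conversions: int) -> float:
    """Conversions per impression; 0.0 when a variant has not been served yet."""
    return conversions / impressions if impressions else 0.0


def pick_winner(results: dict) -> str:
    """results maps variant_id -> (impressions, conversions)."""
    return max(results, key=lambda vid: conversion_rate(*results[vid]))


# Hypothetical cohort results for three of the variants.
sample_results = {"v001": (500, 10), "v002": (500, 25), "v003": (0, 0)}

print(len(variants), "variants generated")
print("best so far:", pick_winner(sample_results))
```

Tagging each render with its `variant_id` is what lets the analytics side join performance data back to the exact creative elements that produced it.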

When a trend emerges, speed matters. AI removes rendering and logistical bottlenecks so teams can add trend-specific lines, change music, or swap assets and relaunch quickly. A beverage brand used one master file to create 32 local edits and get them live in days.

Want to push a last-minute promo? Use text-to-video, automated voiceover, and LTX Studio scene generation to produce a new cut and redeploy across channels in under an hour.

Which part of your production pipeline would you automate first, and which KPIs would you track to prove impact?

DomoAI removes the steep learning curve and the long hours of manual editing. You load images or footage, type what you want, and the AI generates:

The interface looks like a simple studio control panel, and it guides you through choices for:

Who wants to wrestle with timelines when you can work from ideas instead?

Drop a photo into DomoAI, and the platform analyzes depth, facial features, and background to add motion and parallax. The tool creates natural camera moves, subtle breathing, and realistic lighting shifts so a single image starts to feel cinematic.

Want to use that old portrait for a short social clip or a promo card for a stream setup with LTX Studio-style virtual sets? The output matches standard broadcast formats.

Type a few words and DomoAI applies style transfer to convert footage into anime-inspired visuals. You can pick color palettes, frame rates, and cel shading intensity to get anything from soft illustrations to sharper comic looks.

Use it for branded shorts, content drops, or experimental sequences that pair well with real-time overlays and virtual backgrounds used in professional studios.

Build a talking avatar from a photo or a 3D model and write the script. DomoAI syncs lip motion, facial expressions, and head turns to the audio it generates or to your uploaded voice track. You can route the avatar into a live stream or export clips for social platforms.

Want to create an on-camera host without a full studio production crew? This tool does that and supports common avatar workflows used in cloud production and virtual production setups.

DomoAI fits solo creators who need quick content and teams that want consistent assets across channels. It supports multiple aspect ratios for Instagram Reels, TikTok, and YouTube Shorts, as well as broadcast-safe exports for multicamera studio feeds.

If you run remote production or use LTX Studio for live events, you can craft assets in DomoAI and drop them into your scene with the same color and timing specifications.

The editor automates shot selection, timing, and color grade so you don't have to hand-tune every parameter. It interprets your prompt, applies stable motion tracking, and matches audio dynamics to cuts.

You can override any choice with manual controls, but most users finish a clean draft in minutes while keeping broadcast-ready standards for live streaming and cloud-based post-production.

DomoAI exports standard codecs and frame rates that slot directly into live production tools and virtual sets. Use clips as lower thirds, bumper reels, or animated backgrounds inside an LTX Studio workflow.

The platform also supports quick renders for remote presenters, interactive overlays, and graphics assets that sync with multicamera switches and remote recording systems.

Create your first video with DomoAI at no cost and test features like photo motion, anime styling, and talking avatars. Upload a photo, type a prompt, and choose export settings that match your target platform.

Want to see how a DomoAI avatar looks in a live stream with a virtual set from LTX Studio? Render a short clip and run it through your broadcast software.