
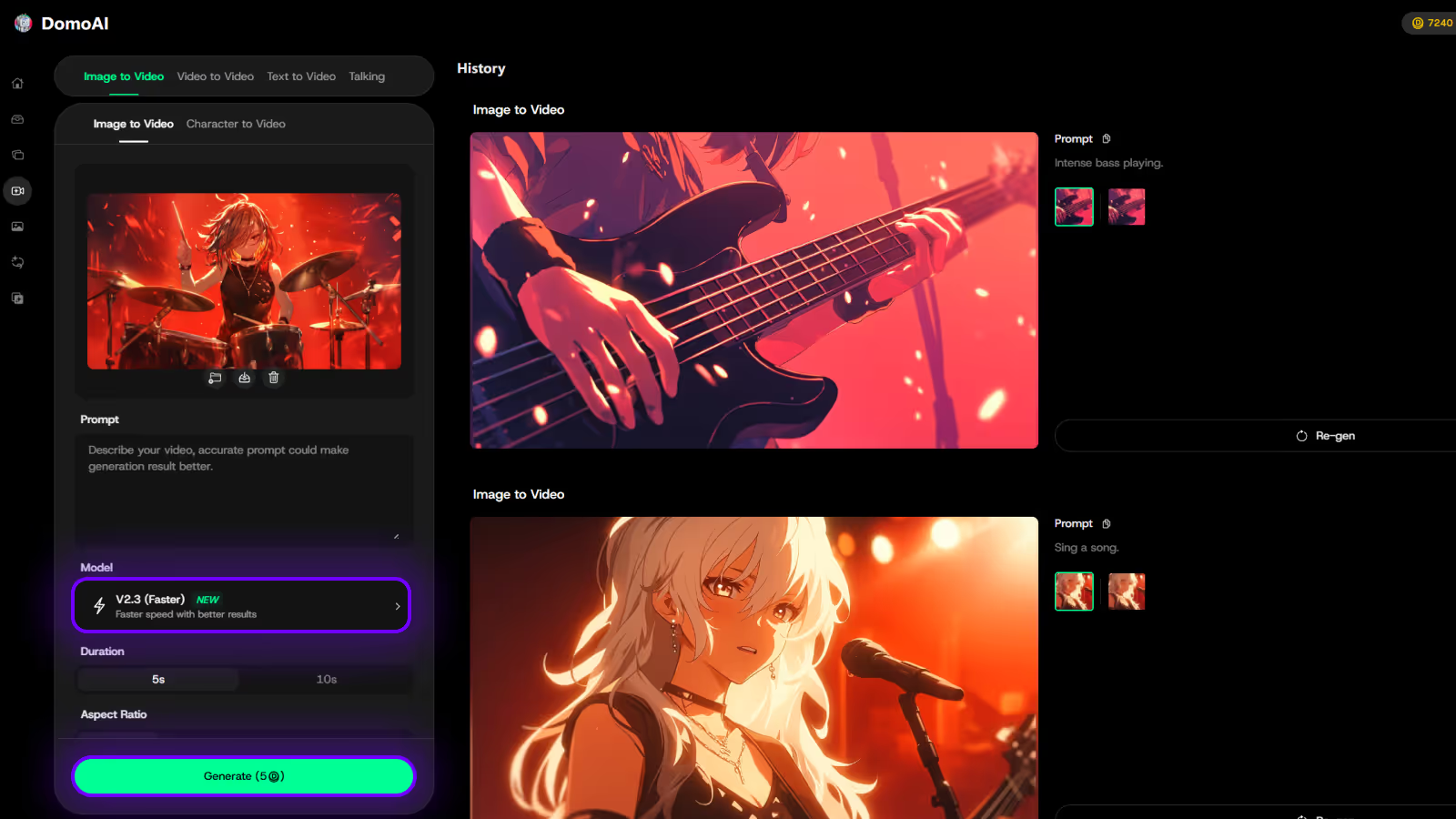


Runway, a New York City-based AI video startup, has announced Act-One, a tool for creating expressive character performances within its Gen-3 Alpha model.

The tool creates animations from just video and voice inputs, significantly reducing the dependency on traditional motion capture systems and making it easier to integrate into film production workflows.

Runway's Act-One is a groundbreaking tool that allows creators to animate AI-generated characters using just a smartphone camera. By capturing facial expressions and movements from a simple video, users can transpose these onto digital characters, creating realistic animations without the need for expensive motion capture equipment or complex rigging.

Act-One is changing generative AI filmmaking by making it easy for creators to incorporate real-life acting into their digital projects.

Filmmakers can produce high-quality animations that reflect genuine emotions and expressions. This bridges the gap between traditional filmmaking and AI-generated content and makes AI-generated characters more convincing.

Runway has shared several videos and styles on their blog to demonstrate the various applications of this tool.

Without the need for motion-capture or character rigging, Act-One is able to translate the performance from a single input video across countless different character designs and in many different styles.

— Runway (@runwayml) October 22, 2024


The workflow is remarkably simple:

Note: Access to Act-One is currently limited. It begins rolling out gradually to users today and will soon be available to everyone.

Runway's Act-One is set to revolutionize how we approach character animation in AI filmmaking. By removing technical barriers, it empowers creators with tools to bring their artistic visions to life more easily than ever before.

If you're looking to explore the full potential of generative AI in your creative projects, consider trying out DomoAI. It's another platform that offers AI tools, such as image-to-video and an AI anime video generator, for enhancing creativity and storytelling in anime filmmaking.
